Introduction

This memo discusses the evaluation design and describes a plan for collecting empirical evidence and identifying indicators to answer the previously developed evaluation questions about the effects of co-curricular activities on the achievements of student veterans within the Armed Services Arts Partnership (ASAP) program. A key aim of the ASAP program, and therefore a focus of its evaluation, is to improve veterans’ quality of life and reintegrate them into their communities by identifying human resources, social attitudes, and veterans’ needs and by using new stakeholders, training activities, and social media sources.

Therefore, it is necessary to monitor the processes in which current student veterans are involved and to understand why some areas are successful and others remain less so. This outcome evaluation should help to examine the results achieved by the ASAP team against the expectations of veterans and local communities, using a quasi-experimental design with two groups.

Research Questions and Hypotheses

Taking into consideration the evaluation questions created in the previous memo, the focus of this project is to check how the program changes veterans’ attitudes, to define how co-curricular activities may improve veterans’ performance, and to investigate the level of veteran satisfaction with services. Therefore, it is expected to involve two types of participants. On the one hand, veterans should share their opinions and attitudes towards the services and options offered. On the other hand, communication with the representatives of the ASAP team can help to identify the level of veterans’ performance in terms of the available resources.

In this project, it is expected to develop multiple hypotheses to underline the role of each variable:

- The presence of new stakeholders aims at promoting an increased literacy level of veterans and their satisfaction with innovative ideas and sources of information;

- New training activities within the frames of the chosen Armed Services Arts Partnership program introduce good opportunities for veterans to develop their skills and achieve a higher literacy level compared to those who do not participate in the program;

- The application of new social media sources provides student veterans with an opportunity to learn more, increase their level of knowledge, and be satisfied with the options available to them.

Research Design

The outcome evaluation will be based on a quasi-experimental design in which two groups are compared in order to identify the worth of the intervention offered to participants. According to Langbein (2012), a quasi-experiment is usually used to evaluate the impact of a program on several indicators. There are two main peculiarities of this research design. First, it should include pretest and posttest comparisons (Chen, 2015).

It means that the opinions of veterans should be gathered two times: before they join the ASAP program and experience changes and after the program is introduced to them. Another important characteristic of the quasi-experiment is the selection of groups for comparison (Langbein, 2012). In this case, it is possible to communicate with veterans who are going to join the program and experience changes in their lives and choose several veterans who do not find it necessary to be a part of the ASAP program and focus on self-development.

Quasi-experimental research design has several types depending on the number of groups and expected outcomes. In this case, a pre-and-post comparison group design will be followed. It is characterized by a high level of internal validity and simplicity in its performance (Langbein, 2012). The chosen design does not have a random component. The sample includes specific people who meet certain inclusion criteria (as an opportunity to reduce an endogeneity bias). As a result, not every member of the population has an equal chance to be a part of the investigation, but the selection of participants is supported by a clear reason, potential benefits, and expected outcomes.
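The logic of a pre-and-post comparison group design can be illustrated with a short sketch. It compares the change in the program group with the change in the comparison group (a simple difference-in-differences calculation). The function name, the 1-5 scale, and all scores below are hypothetical and serve only as an illustration; they are not data from the ASAP evaluation, and this calculation is one possible way to summarize such a design, not the method prescribed by the cited sources.

```python
from statistics import mean


def difference_in_differences(program_pre, program_post, comparison_pre, comparison_post):
    """Return the mean change in the program group minus the mean change in the comparison group."""
    program_change = mean(program_post) - mean(program_pre)
    comparison_change = mean(comparison_post) - mean(comparison_pre)
    return program_change - comparison_change


# Hypothetical satisfaction scores on a 1-5 scale (illustrative only, not collected data).
program_pre = [2, 3, 3, 2]
program_post = [4, 4, 5, 3]
comparison_pre = [3, 2, 3, 2]
comparison_post = [3, 3, 3, 2]

effect = difference_in_differences(program_pre, program_post, comparison_pre, comparison_post)
print(f"Estimated program effect: {effect:.2f} points")
```

A positive value would suggest that the program group improved more than the comparison group over the same period. Because the groups are not randomly assigned, such a difference should be read with caution.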

Evaluation Outcomes

The introduction of new training activities, stakeholders, and social media resources should promote the creation of significant outcomes during the program evaluation. The distinctive feature of the ASAP program and associated changes is the possibility to promote personal and professional development and involve both veterans and ASAP workers in various co-curricular activities. For example, it is expected that the level of satisfaction among veterans with the services offered can be considerably increased.

Consequently, military families may be more easily integrated into civilian activities and understand the demands of the world around them. Another important benefit, as well as an outcome, is an increase in the number of literate veterans with abilities to express their feelings, learn art, gain community respect, and develop personal responsibilities (Armed Services Arts Partnership, n.d.). In general, because of various activities and options, the quality of veteran life has to be improved, promoting their happiness, enthusiasm, and fulfillment.

Evaluation Indicators

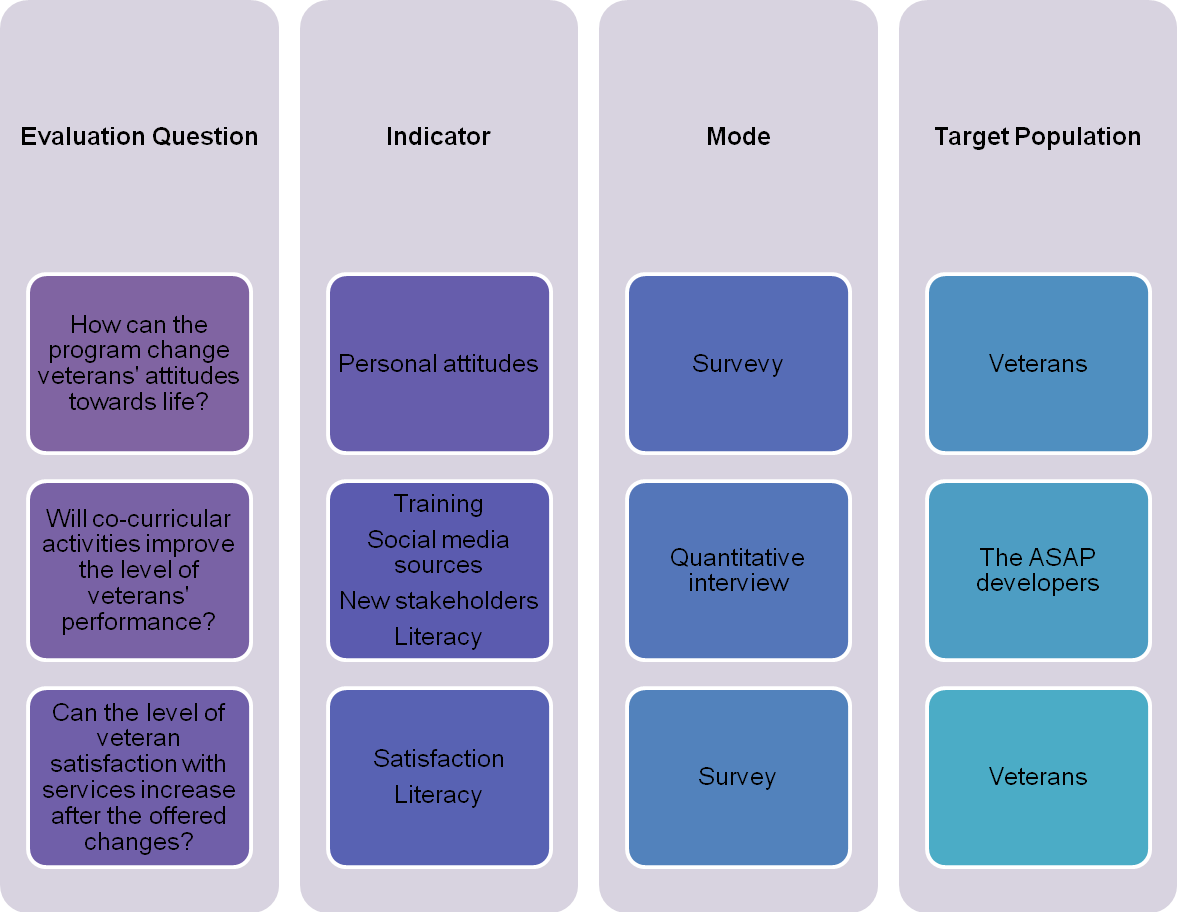

This program evaluation takes the form of an analysis based on several indicators. The key indicators show how to measure the effects of the intervention, which consists of changes in training activities, social media usage, and the participation of new stakeholders (e.g., new organizations, museums, workshops, and celebrities). In addition, special attention should be paid to changes in veterans’ attitudes towards life after military service and to the necessity of developing social relations in their communities.

It is also possible to evaluate the level of literacy among veterans who agree to participate in the ASAP program and who use personal skills and opportunities to continue their integration into civilian life. Finally, changes in the level of veteran satisfaction have to be estimated. It is necessary to identify the number of veterans who are very satisfied with new options and the number who want further modifications to be offered to them. Therefore, in general, there are three independent variables of the intervention under discussion: training, social media, and stakeholders. Dependent variables that indicate the effectiveness of the intervention include satisfaction level, attitudes towards life, and literacy.
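As a minimal sketch of how the satisfaction indicator could be tallied, the example below counts the veterans who report being very satisfied and expresses that count as a share of all respondents. The response labels and the answers themselves are hypothetical assumptions, not items from the actual questionnaire.

```python
from collections import Counter

# Hypothetical survey answers (illustrative only, not collected data).
responses = [
    "very satisfied",
    "satisfied",
    "very satisfied",
    "neutral",
    "dissatisfied",
]

counts = Counter(responses)
very_satisfied = counts["very satisfied"]  # number of very satisfied veterans
share = very_satisfied / len(responses)    # proportion of all respondents

print(f"{very_satisfied} of {len(responses)} respondents ({share:.0%}) are very satisfied")
```

The same tally could be repeated for the pre-implementation and post-implementation surveys and for each group, so that the counts can be compared.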

Data Collection

In this section of the report, the purpose is to describe the main features of a data collection plan. In the beginning, time frames have to be established to get an idea of how long this evaluation can last. The second step is the identification of target participants and the rationale for this choice. The next task is to examine the collection protocol and explain the modes of data collection. In the end, a chart with the necessary data in regard to the three evaluation questions will be developed to test the already stated research hypotheses.

In terms of measuring the chosen indicators, it is planned to collect data several times. First, pre-implementation data will be collected over a period of one month. It is expected to communicate with local veterans who are members of the ASAP program and with those who have never participated in similar practices in order to compare their levels of knowledge and literacy. Veterans will be divided into the experiment group (those who join the ASAP program) and the control group (those who do not join and are not aware of the ASAP program) and invited to answer the questions offered by a researcher.

Then, the implementation itself has to last for the next three months, including direct communication with the ASAP team that is based on an interview with a list of close-ended questions. This stage is necessary for the project as it helps to explain the essence of the intervention and the worth of co-curricular activities, as well as to recognize how the developers of the ASAP program estimate veterans’ knowledge before and after the intervention. The final stage of data collection will be post-implementation and will include the veterans who agreed to participate in the first stage. A survey will be offered to every participant, with several questions being similar to those from the pre-implementation questionnaire.
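The three stages can be laid out as a simple schedule. The sketch below assumes a hypothetical start date on the first day of a month and assumes that the post-implementation survey window lasts one month; the helper `add_months` is introduced only for this illustration.

```python
from datetime import date


def add_months(start, months):
    """Shift a date forward by whole calendar months (the day is fixed at the 1st for simplicity)."""
    month_index = start.month - 1 + months
    return date(start.year + month_index // 12, month_index % 12 + 1, 1)


start = date(2024, 1, 1)  # hypothetical start date, not taken from the memo

# (stage name, length in months); the one-month post-implementation window is an assumption.
phases = [
    ("Pre-implementation survey", 1),
    ("Implementation and ASAP team interviews", 3),
    ("Post-implementation survey", 1),
]

schedule = []
cursor = start
for name, months in phases:
    end = add_months(cursor, months)
    schedule.append((name, cursor, end))
    cursor = end

for name, begins, ends in schedule:
    print(f"{name}: {begins} to {ends}")
```

Under these assumptions, the whole evaluation spans five months from the first survey to the end of the last one.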

To succeed in this program evaluation, it is expected to collaborate with two groups of people. On the one hand, the developers of the ASAP program can share their opinions about the latest changes in their activities, the number of veteran students, and the improvements that can be recommended. They focus on the overall development of the program, its benefits for veterans and communities, and possible costs that cannot be neglected. Communication with this group is a good chance to learn what ordinary people know about veterans’ needs and how they develop attitudes towards military services and associated outcomes. In addition, their answers can help to understand what services are offered to people and what outcomes they want to achieve.

On the other hand, participants have to be veterans or members of military families who experienced change, trauma, or loss during their service but want to reintegrate into civilian life (Armed Services Arts Partnership, n.d.). It is expected to choose veterans who want to participate in the ASAP program and those who do not; they will thereby be divided into two groups, where one receives the intervention and the other does not. Still, both groups have to take pre-tests and post-tests in order to measure the differences over a certain period of time (the next three months).

Every design requires a specific data collection mode and instrument. This quantitative research has to be effective enough to gather information from different people and focus on different aspects of co-curricular activities that can be offered to veterans today. There are three evaluation questions that have to be answered, and three modes should be offered with their specific strategies and requirements. A collection (intervention) protocol is created to identify the details of a procedure and the components of the work to be done (Chen, 2015).

The first evaluation question is, “How can the program change veterans’ attitudes towards life?” Target participants for this data collection part are veterans from experiment and control groups with their opinions about life, military services, and the differences between civilian and military living conditions. Veterans from the program have to introduce information before and after their participation in the program, and veterans who do not participate in the intervention should give their answers using their recent experience and lifelong changes.

Regarding the fact that different people can choose various time options, it is planned to gather pre-intervention information for one month and post-intervention information one month after a three-month implementation phase. The collection protocol includes the necessity for veterans to give informed consent to be involved in a program and/or its evaluation (Langbein, 2012). An experimental group has to be informed that the program may change their behaviors and attitudes towards different things.

A control group should find time to answer several questions at different periods of time. This group of people is not aware of the intervention and the essence of the ASAP program with its co-curricular activities and options. Therefore, it is important to demonstrate respect and patience to these veterans as they may be less informed or disoriented. No prejudice or subjective attitudes can be developed in relation to participants.

A questionnaire as a part of a survey is a mode of data collection that is chosen for this stage of program evaluation. This method makes it possible to gather information from different people in a short period of time, using the already prepared questions and possible answers. A list of questions will be sent to 100 veterans via e-mail. Fifty veterans have to join the ASAP program within the next month, and another fifty people should continue their ordinary lives without a direct impact of this program.

The main rationale for this choice is the possibility to have two groups of people of a similar age and with the same experience and compare their attitudes to life, their interests, and abilities in regard to the current living conditions and changes. The participants are obliged to return a questionnaire within the next two weeks after they receive it. They can also ask for a printed version in case they feel uncomfortable using online services.

The second evaluation question is, “Will co-curricular activities like new training approaches, social media use, or the participation of new stakeholders improve the level of veterans’ performance?” To answer this question, the employees of the ASAP program are used as target participants. Like the veterans, they have to give their written permission to participate in this evaluation voluntarily. Informed consent has to be presented, and all aspects of data collection should be explained. The answers given by ten people should provide enough information to understand how various activities may determine veterans’ performance. The peculiar feature of this type of communication is that it gathers clear and evident facts rather than personal opinions or suggestions.

This part of a data collection plan includes close-ended questions within a quantitative interview. An intensive interview mode involves twenty individuals who answer the questions posed by an evaluator (Chen, 2015). Cooperation with program stakeholders creates an opportunity to identify the main co-curricular activities and changes that may be offered within the frames of ASAP. Interviews with ASAP developers should happen during the program implementation. A period of one or two weeks within the three-month implementation phase, after data have been collected from the first group of people, is considered appropriate.

The third evaluation question is, “Can the level of veteran satisfaction with services increase after the offered changes?” Veterans are again target participants of this data collection stage. It is expected to contact the same veterans who participated in the pre-intervention questionnaire and offer a new survey that uses the same questions and adds several new clarifying ones. This mode of data collection is frequently used by researchers and evaluators as it gives reliable, quantitative information on the chosen issue from many people in a short period of time (Langbein, 2012).

The same conditions are offered to veterans: they can give their answers online or ask an evaluator to provide them with a printed version of the questions. This part of data collection should occur after the participants have completed the program, meaning that new answers are given three months after the last communication.

All these strategies and data collection modes help to answer evaluation questions and get a clear idea of how to continue working on this program evaluation. In the chart below, there is a guide on how to address each question and recognize what kind of information can be gathered. The main characteristics of evaluation design and a data collection plan are introduced in a brief way.

Concluding Remarks

In total, the choice of evaluation design and the development of a data collection plan are a good opportunity to improve an understanding of program evaluation, its need, and possible outcomes. The main feature of this project is that program evaluation is based on the participation of two groups of people. ASAP developers know the program best and can determine the role of every veteran in society. Veterans are the direct recipients of knowledge and activities with the help of which they can change their lives, learn the differences between military and civilian environments, and be ready for reintegration into local communities and communication with different people. Sometimes, it is not an easy task to take a new step and leave loss and challenges behind. Quantitative interviews and questionnaires should help to indicate that difference and clarify the worth of the program.

References

Armed Services Arts Partnership. (n.d.). About ASAP. Web.

Chen, H. T. (2015). Practical program evaluation (2nd ed.). Thousand Oaks, CA: SAGE.

Langbein, L. (2012). Public program evaluation: A statistical guide (2nd ed.). Armonk, NY: M.E. Sharpe.