Introduction

The Unified Modeling Language (UML) is increasingly used across application domains, methodologies, and platforms. This has raised the need for customized consistency management. So far there has been little support for such customization, save for the well-formedness rules expressed in OCL. In particular, developers have had no support for specifying behavioral consistency conditions. Using UML effectively for modeling requires both method and tool support, at the syntactic and the semantic level.

There are several ways to develop a method for managing consistency. One is to translate models partially into a formal method that provides a language and tool support for formulating and verifying semantic consistency conditions. In this approach, the conditions are specified by means of transformations, expressed graphically and at the metamodel level (Van, Letier, and Darimont 1998, p. 122). How consistency is defined for a given application domain depends on the kind of consistency management that is required.

Related work

Earlier work has proposed both formal and informal processes, all aimed at helping developers produce good software. Consistency management throughout the process has been a point of concern. The design patterns that have been proposed have been refined by trying out different variants so that the best designs are arrived at.

These activities and further research are helping to arrive at better designs. Two objectives have not been addressed by this research. The first is active guidance that helps designers produce high-quality software. The second is active guidance during design so that the intended requirements are followed. Together these ensure high software development standards.

To achieve these objectives, the main problem to solve is efficient consistency management governed by consistency rules. One point of agreement is that consistency rules should not always be strictly enforced. There have been proposals to control when these properties are checked. The consistency management method I propose in this paper follows a similar model.

One difference between these approaches is that the other researchers' proposals monitor trigger events, whereas my approach relies on a proactive process specification. The second difference lies in the reaction to consistency failures: their systems react to changes in the system, while mine lets the process programmer specify any action, whether it is carried out by a human or automatically.

Other systems let consistency rules guide the process while designers continue to work. One example is Argo/UML, a tool for editing models. In Argo/UML, critics examine the model and check that the defined consistency rules are followed. If a rule is violated, an item is added to the user's to-do list as a reminder of what needs to be done.

In this way, consistency rules are checked continuously. One issue that has not been addressed is that there is no automatic control over which critics are active at which times. As a result, many items are placed in the user's to-do list, because the model is inconsistent at almost every stage. Most of the reported errors are errors of omission that the user could not yet have avoided. Critics can, however, be turned on and off manually.
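The critic mechanism described above can be sketched in a few lines of Python. This is only an illustration of the idea, not Argo/UML's actual API: the names `Critic`, `run_critics`, and `classes_without_operations`, as well as the dictionary layout of the model, are assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Critic:
    """A hypothetical critic: a named rule check that can be switched on or off."""
    name: str
    check: Callable[[dict], List[str]]  # returns one message per violation found
    enabled: bool = True


def run_critics(model: dict, critics: List[Critic]) -> List[str]:
    """Collect to-do items from every enabled critic."""
    todo = []
    for critic in critics:
        if critic.enabled:
            todo.extend(f"{critic.name}: {message}" for message in critic.check(model))
    return todo


def classes_without_operations(model: dict) -> List[str]:
    """Illustrative rule: report classes that declare no operations yet."""
    return [
        f"class {name} has no operations"
        for name, operations in model["classes"].items()
        if not operations
    ]


# Assumed toy model: class name -> list of operation names.
model = {"classes": {"Model": ["addNode"], "Node": []}}
critic = Critic("MissingOperations", classes_without_operations)

todo_items = run_critics(model, [critic])     # one item, for Node
critic.enabled = False                        # the user turns the critic off
todo_when_off = run_critics(model, [critic])  # no items are produced
```

The `Node` class is flagged only because the designer has not reached it yet, which is the kind of error of omission that fills the to-do list when critics are always active.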

Argo/UML is written in Java, which has been found to provide flexibility. Others have proposed different languages that, like Java, can be used to specify consistency rules. The standard constraint language for UML models is the Object Constraint Language (OCL). It allows sets of model elements to be identified using path selection operations, and it is based on first-order logic. It has been used to define the semantics of UML. Because OCL uses first-order logic, transitive closure is not available, which reduces its usefulness for expressing consistency rules.

Another tool, xlinkit, resembles Argo/UML in that it allows sets of elements to be identified. The difference is that it works on documents encoded in XML and uses XPath expressions for path selection. It uses first-order logic but adds transitive closure. Because UML tools, including Argo/UML, produce output in XMI, a standard XML encoding is available. xlinkit can therefore be used to specify consistency rules.
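To illustrate why transitive closure matters for consistency rules, the following minimal sketch computes everything reachable through a relation. A rule such as "no class may inherit from itself, directly or indirectly" needs exactly this kind of reachability. The function name `transitive_closure`, the `parents` mapping, and the class names are hypothetical and belong to neither OCL nor xlinkit.

```python
def transitive_closure(relation, start):
    """Return every element reachable from `start` by following `relation` one or more times."""
    reached = set()
    frontier = list(relation.get(start, ()))
    while frontier:
        node = frontier.pop()
        if node not in reached:
            reached.add(node)
            frontier.extend(relation.get(node, ()))
    return reached


# Assumed toy inheritance relation: class name -> direct parent classes.
parents = {"Circle": ["Shape"], "Shape": ["GraphElement"], "GraphElement": []}

# All direct and indirect ancestors of Circle.
ancestors = transitive_closure(parents, "Circle")

# Consistency rule: no class may appear among its own ancestors.
has_cycle = any(cls in transitive_closure(parents, cls) for cls in parents)
```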

My approach

My approach is to describe the software design process as a formal process program. To manage modeling effectively I use Little-JIL, which defines a process in terms of the steps that are to be performed. To check consistency, I attach checks to individual steps. Prerequisite checks run before a step begins, and post-requisite checks run after it completes. If an artifact fails a consistency check, an exception is thrown, and the process program specifies a handler that responds to it.
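The idea of attaching checks to a step can be sketched as follows. This is a plain Python illustration under simplifying assumptions, not Little-JIL syntax: `run_step`, `ConsistencyError`, and the dictionary used for the diagram are hypothetical names introduced only for this example.

```python
class ConsistencyError(Exception):
    """Raised when an artifact fails a consistency check."""


def run_step(action, artifact, prerequisites=(), postrequisites=(), handler=None):
    """Run one step: prerequisite checks, the step itself, then post-requisite checks.

    A failed check raises ConsistencyError. If a handler is given it receives the
    artifact and the error; otherwise the error propagates to the caller.
    """
    try:
        for check in prerequisites:
            check(artifact)
        action(artifact)
        for check in postrequisites:
            check(artifact)
    except ConsistencyError as error:
        if handler is None:
            raise
        handler(artifact, error)
    return artifact


def create_class(diagram):
    """The step itself: the designer adds a class named Node."""
    diagram["classes"].append("Node")


def class_names_are_unique(diagram):
    """Illustrative post-requisite: no two classes may share a name."""
    seen = set()
    for name in diagram["classes"]:
        if name in seen:
            raise ConsistencyError(f"duplicate class name: {name}")
        seen.add(name)


failures = []


def record_failure(diagram, error):
    """Illustrative handler: note the failure so a later step can repair it."""
    failures.append(str(error))


diagram = run_step(
    create_class,
    {"classes": ["Node"]},  # the diagram already contains a class named Node
    postrequisites=[class_names_are_unique],
    handler=record_failure,
)
```

Keeping the handler separate from the check mirrors the text: the check only detects the inconsistency, while the process program decides how to respond.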

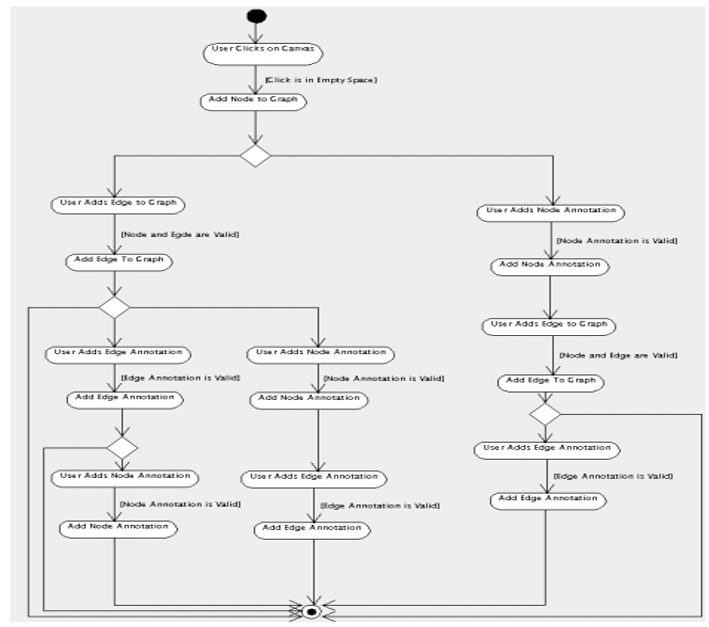

Experiments have been carried out in which the environment guides the designer toward good designs. In one example, the designer develops a validating graph editor (Krishna, Vilkomir, and Ghose 2009, p. 233). The graph editor lets users create nodes and annotate them, connect nodes with edges, and annotate the edges as well. Throughout, the editor should identify nodes that violate the rules and remove them, so that no graphs are created that are invalid with respect to the model.
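A validating graph editor of this kind might look like the sketch below. The text does not state the editor's validity rules, so the two rules used here (node identifiers are unique, and an edge may only connect existing nodes) are assumptions, as are the names `GraphEditor` and `InvalidGraphEdit`. For simplicity the sketch rejects an invalid edit rather than removing elements afterwards.

```python
class InvalidGraphEdit(Exception):
    """Raised when an edit would make the graph invalid."""


class GraphEditor:
    """A toy validating editor for annotated nodes and edges."""

    def __init__(self):
        self.nodes = {}  # node id -> annotation
        self.edges = {}  # (source id, target id) -> annotation

    def add_node(self, node_id, annotation=""):
        # Assumed rule: node identifiers must be unique.
        if node_id in self.nodes:
            raise InvalidGraphEdit(f"node {node_id!r} already exists")
        self.nodes[node_id] = annotation

    def add_edge(self, source, target, annotation=""):
        # Assumed rule: both endpoints must already be in the graph.
        for endpoint in (source, target):
            if endpoint not in self.nodes:
                raise InvalidGraphEdit(f"unknown node {endpoint!r}")
        self.edges[(source, target)] = annotation


editor = GraphEditor()
editor.add_node("a", "start")
editor.add_node("b")
editor.add_edge("a", "b", "first edge")

try:
    editor.add_edge("a", "c")  # rejected: node c does not exist
    rejected = False
except InvalidGraphEdit:
    rejected = True
```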

The project starts with the creation of use cases. Their functional requirements are described in the diagram that follows.

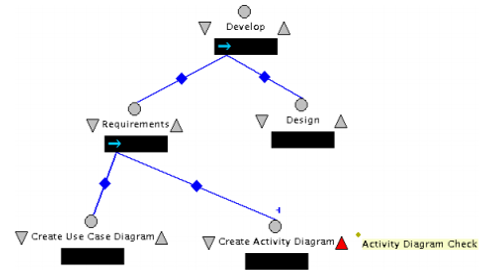

Example of Little-JIL Process Program

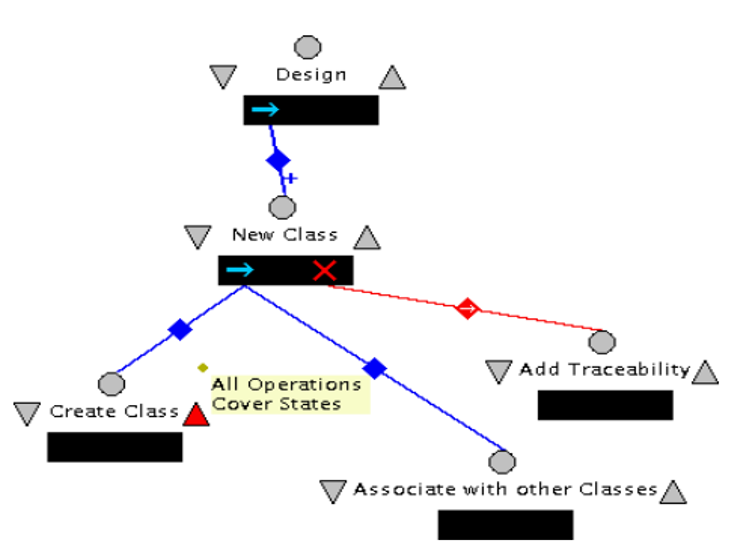

The first diagram shows the first part of the process. A process is a collection of steps sequenced from left to right. A + sign indicates that a step may be repeated, at the discretion of the person carrying out the process (Hoa and Winikoff 2010, p. 342). A darkened triangle indicates a step that must be performed after the step it is attached to has completed. The diagrams make clear that intra-diagram consistency checks are part of the process. The rest of this paper concentrates on inter-diagram consistency checks.

A further scenario shows how a use case can be created from the description given so far. It shows how a node is added to an existing graph.

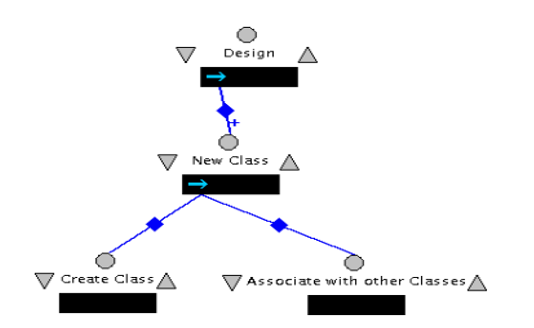

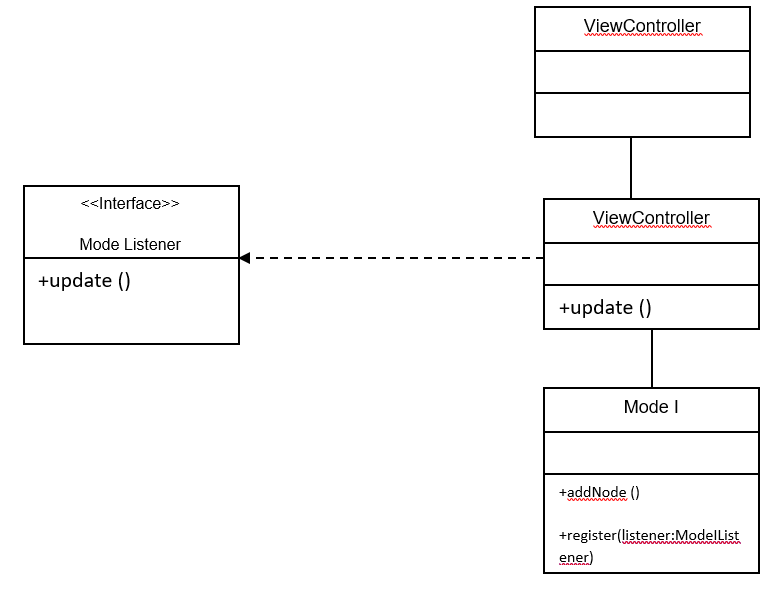

The design is then elaborated from the process. At this stage the designer creates a class diagram for the software system. Consistency checks can be performed here with the aim of producing high-quality design products. Efficient consistency management can be achieved using the class diagram, however incomplete it may be.

Consistency and traceability

One factor that determines the quality of a design is its tracing information. A good design lets the designer trace each object back to its origin. This makes the design more consistent and more manageable. Traceability information should therefore be included when the system is designed. This has helped object-oriented systems to be developed correctly. The information entered in the model is what makes it possible to recover the origin of each element.

One way to obtain traceability information is to check that consistency rules are followed during development. Take the creation of a class as an example. Before a class is considered complete, there must be assurance that every method it declares is accounted for. This is checked in the Create Class step. According to my research, this is one of the best ways of checking that consistency is maintained. In the Create Class step, we check that each method of the class is associated with some action in the model through the implements relationship. The rule can be written as:

$$\forall o \in \mathit{operations} : \exists s \in \mathit{actionStates} : o \xrightarrow{\text{implements}} s$$

It follows from the rule that, in classes that satisfy it, every operation traces to an element of the requirements specification. In the diagram above, if the addNode method of Model has an implements dependency on Add Node to Graph, then addNode satisfies rule 1. The Register method, however, does not. To resolve this exception, the designer must add the missing traceability information (Easterbrook and Nuseibeh 1995, p. 345). Once this is done, all the constraints are satisfied.
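Rule 1 can be checked mechanically. The sketch below assumes that the implements relationship is available as a set of (operation, action state) pairs. That representation and the function name `operations_without_action_state` are illustrative choices, while the operation and action state names follow the example in the text.

```python
def operations_without_action_state(operations, action_states, implements):
    """Rule 1: return the operations that implement no action state.

    `implements` is assumed to be a set of (operation, action_state) pairs.
    An empty result means the rule holds.
    """
    return [
        operation
        for operation in operations
        if not any((operation, state) in implements for state in action_states)
    ]


operations = ["addNode", "Register"]
action_states = ["Add Node to Graph"]
implements = {("addNode", "Add Node to Graph")}

# Register has no implements dependency, so it is the only violation.
violations = operations_without_action_state(operations, action_states, implements)
```

Each entry in `violations` corresponds to an exception that the designer resolves by adding traceability information.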

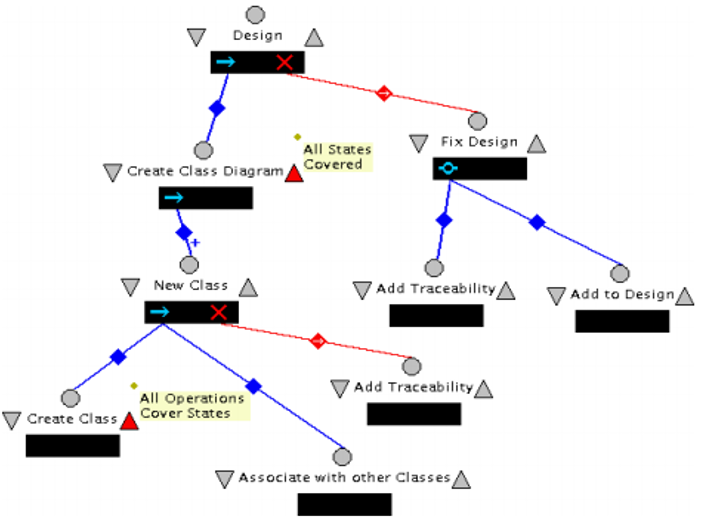

Diagram 5 shows a revision of the Design step that includes the consistency checks and the handling of their failures. The Add Traceability step handles the exception that can be raised by Operations Cover States, which is a post-requisite of Create Class.

The arrow on the edge leading to Add Traceability indicates that, once the traceability information has been added, the designer is free to move on to the next step, which is to associate the class with other classes (Spanoudakis and Zisman 2001, p. 244). Consistency management should also ensure that the model is complete: every portion of the requirements must be satisfied by the design, just as every operation responds to some portion of the requirements. This is captured by the following rule:

$$\forall s \in \mathit{actionStates} : \exists o \in \mathit{operations} : o \xrightarrow{\text{implements}} s$$

This rule cannot be expected to hold at every point, because not all the classes have been declared yet. Only when all classes have been declared will all the requirements be covered. A further revision of the process therefore checks this rule once all the classes have been declared and specified (Easterbrook and Nuseibeh 1996, p. 34). The revision adds an extra step that handles the exceptions encountered in the design.
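The coverage rule can be sketched in the same style as rule 1, again assuming that the implements relationship is a set of (operation, action state) pairs. The action state `Connect Nodes` and the operation `addEdge` are hypothetical names added to show why the rule fails while classes are still missing.

```python
def uncovered_action_states(operations, action_states, implements):
    """Coverage rule: return the action states that no operation implements.

    `implements` is assumed to be a set of (operation, action_state) pairs.
    An empty result means every requirement is covered.
    """
    return [
        state
        for state in action_states
        if not any((operation, state) in implements for operation in operations)
    ]


action_states = ["Add Node to Graph", "Connect Nodes"]

# Early in the design only one operation has been declared.
operations = ["addNode"]
implements = {("addNode", "Add Node to Graph")}
uncovered_early = uncovered_action_states(operations, action_states, implements)

# Once the remaining operation is declared and traced, the rule holds.
operations.append("addEdge")
implements.add(("addEdge", "Connect Nodes"))
uncovered_late = uncovered_action_states(operations, action_states, implements)
```

Running the check only after all classes are declared avoids reporting `Connect Nodes` as a failure when the designer simply has not reached it yet.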

This extra step is Create Class Diagram. It is used to check conformance with the consistency rule. Placing the check on this step ensures that it runs only when the designer has finished the design, rather than throughout development. As in the preceding step, a failed check throws an exception, which is handled elsewhere in the process program.

Here the designer has the discretion to choose whether to add traceability information or to extend the design so that all the requirements are met.

Experience and future work

Up to this point, the paper has been concerned with feasibility. We have tried to answer two questions: whether the application of consistency constraints can be controlled by a formal process program, and what effect, if any, consistency checking has on the design process.

Feasibility

Juliette, which executes the process program, is a good vehicle for learning how feasibility can be achieved in requirements modeling. We use Little-JIL's ability to specify what each step requires. In diagram 1, the Requirements step requires an engineer and the Design step requires a designer. When the program runs, Juliette reads the instances that are needed and binds them to the program. It then assigns the steps to those instances in the sequence specified by the program.
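The binding of instances to steps can be illustrated with a small sketch. It is not Juliette's actual interface: the function `bind_agents`, the role names, and the agent names are assumptions, and the sketch simply picks the first available instance for each required role.

```python
def bind_agents(steps, available):
    """Assign each step to an agent with the role that the step requires.

    `steps` is a list of (step name, required role) pairs in execution order.
    `available` maps a role to the list of agents that can fill it.
    """
    assignments = []
    for step_name, role in steps:
        candidates = available.get(role, [])
        if not candidates:
            raise LookupError(f"no agent with role {role!r} for step {step_name!r}")
        # Simplifying assumption: take the first candidate for the role.
        assignments.append((step_name, candidates[0]))
    return assignments


steps = [("Requirements", "engineer"), ("Design", "designer")]
available = {"engineer": ["alice"], "designer": ["bob"]}

assignments = bind_agents(steps, available)
```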

Application: Java-Commerce-Client

Applying consistency rules in modeling and programming has led to applications and languages suited to current programming environments. Client/server transactions are carried out through web applications. Current technology lets a user in a remote location access an application elsewhere and carry out useful transactions. Browsers contact the server through protocols. Consistency rules have helped improve communication between servers and their clients, the browsers.

The protocol used depends on the transaction being processed. This allows users to use the Hypertext Transfer Protocol (HTTP), the File Transfer Protocol (FTP), Telnet, and email from one platform.

With the advent of Web 2.0, more and more applications are being deployed on the Internet to support client/server transactions. Most of these applications are developed following consistency rules, which have matured to the point where robust applications are possible. More of today's computer systems are web based, and many organizations are shifting to paperless offices thanks to improvements in client/server transactions. Commerce has benefited greatly from this trend because many transactions are now carried out on the Internet.

It is therefore imperative that these transactions be as secure as possible. Web-based transactions are frequently attacked, and many frameworks have been developed to combat security issues on the Internet. This paper explores the features that make Java Commerce Client a secure framework for transactions over the Internet. The framework has many features that make client/server transactions the preferred way of doing business on the Internet. Compared to other frameworks that support client/server transactions, Java Commerce Client offers more advantages. It continues to be extended with more functionality and content.

Features of Java Commerce Client

Java Commerce Client provides a framework in which large enterprises share a common platform for communication. The platform was developed following modeling rules, with consistency rules in mind. Consistency is left to be handled by the framework. The framework organizes application-specific code into modules called cassettes. Cassettes are combined to form the mix that is used in an electronic transaction. The framework works for applications in large and small organizations, including accounting, tax calculation, and finance software. All these applications require a high level of security.

The structure of Java Commerce Client is built on data modeling rules. A cassette is built on the Java archive (JAR) format. Three kinds of Beans are commonly used: operation beans, protocol beans, and instrument beans.

Some of the features that make Java Commerce Client appealing are discussed in the paragraphs that follow. The main reason for minimized sizes is that much of the functionality is provided externally. Software systems have many artifacts that must interrelate with each other. The code and its tests must be traceable to the design. The design in turn must meet the requirements and must be executable. For a model to be considered effective, its artifacts must be well related, and it should offer rich information about the constraints that it portrays.

This paper therefore looks at these relationships so that software developers can build better software in the future. In particular, the findings should help developers identify methodologies for building robust software. In consistency management, designs must follow what is stipulated in the requirements.

- Java Applet Framework: this framework provides the basic functionality of Java applets. Built on the Juliette program, it offers a smooth way of checking consistency in the design process.

- Java Commerce Framework: this platform provides secure monetary transactions over the Internet. It has helped produce systems that are resistant to cyber attacks.

- Java Enterprise Framework: this framework supports distributed systems.

- Java Server Framework: this framework supports communication between servers and clients. It also supports servlets.

- Java Security Framework: It provides support for secure online processing.

A major evolution of the JCC language was the introduction of a new tasking model based on protected objects, which allows a more efficient solution for sharing data. JCC also has Asynchronous Transfer of Control, a powerful construct that lets it receive events without polling or waiting cycles.

Another feature that makes JCC strong is the requirement that compilers be validated. A compiler targeting real-time systems must conform to both the real-time systems annex and the systems programming annex.

Java RPC is another application area where consistency modeling is evident. Remote Method Invocation (RMI) was introduced in JDK 1.1. It raised Java programming a notch higher by letting Java programmers work in the world of distributed object computing. The technology evolved a great deal with the Java 2 platform (JDK 1.2). One of the primary goals of RMI's design is to let programmers write Java for distributed computing environments just as they do for non-distributed ones.

Java is well placed for the deployment of Web 2.0 applications. Any web application consists of a server side and a client side. Apache Tomcat is a mature server for processing Java web applications. Sun Microsystems has worked to make web application development in Java easier and has released a package for this purpose, the Java Web Services Developer Pack (Java WSDP).

It has the necessary tools for deploying and configuring web services on the Java platform. Most of the Java tools for web applications have passed the review requirements that apply to any web application. With the rush for online applications, Java has put in place measures to counter attacks aimed at them. Web 2.0 applications promise many advantages to businesses, whether they operate worldwide or within a small geographical area, and IT professionals are alert to the havoc these applications can cause when it comes to security. Java web application services are here to stay.

Comparison of JCC and JAVA

Java is popular with most web programmers and has gained much popularity in the Internet era. Its large community of programmers makes it a promising language for the future. It is likely to remain popular with web programmers, whose numbers are predicted to increase.

JCC's main problem remains its complexity. JCC has a strong typing model that most programmers find tedious and difficult to learn. Most of these programmers prefer Java and C++ because of their ease of use. JCC's initial reputation for compiler inefficiency and complexity cost it popularity and prevented it from spreading to many programmers.

JCC has advantages over Java, such as the separation of logical interface from implementation, strong compile-time type checking, and higher-level synchronization constructs. Java followed JCC in introducing a real-time support extension.

JCC offers other advantages, such as object-oriented programming capability, support for concurrency, strong type checking, and hardware interfacing. These advantages make the difference in the development of real-time applications (Barnes and Gilbert 2003).

Java has gained a lot of popularity in the software engineering community. It was initially developed for use in embedded systems. It is now one of the favorite languages for web programming.

References

Easterbrook, S, & Nuseibeh, B 1996, ‘Using ViewPoints for Inconsistency Management’, Software Engineering Journal, Vol. 11, No. 1, pp. 34-37.

Easterbrook, S, & Nuseibeh, B 1995, ‘Managing Inconsistencies in an Evolving Specification’, IEEE International Symposium on Requirements Engineering, Vol. 2, No. 4, pp. 48-55.

Hoa, D, & Winikoff, M 2010, ‘Supporting change propagation in UML models’, The 26th IEEE International Conference on Software Maintenance, Vol. 24, No. 2, pp. 43-45.

Krishna, A, Vilkomir, A, & Ghose, A 2009, ‘Consistency preserving co-evolution of formal specifications and agent-oriented conceptual models’, Information and Software Technology, Vol. 52, No. 6, pp. 478-496.

Spanoudakis, G, & Zisman, A 2001, ‘Inconsistency management in software engineering: Survey and open research issues’, World Scientific, pp. 23-25.

Van, A, Letier, E, & Darimont, R 1998, ‘Managing conflicts in goal-driven requirements engineering’, IEEE Transactions on Software Engineering, Vol. 3, No. 2, pp. 23-24.