Introduction

The objective of this chapter is to describe the methodology employed by this study to collect and analyze data. Reyes (2004) recommends Watkins’ (1994) research methodology, which involves weighing seven criteria:

novelty of the problem; investigator’s interest in the problem; practical value of the research to the investigator; worker’s special qualification; availability of the data; cost of investigation and; time required for the investigation. (p. 3)

Beyond these considerations, one of the most important issues in conducting social research is keeping the focus on its distinct elements, such as the problem statement, the conceptualization of the theory, and the choice of research design.

Denzin and Lincoln (1998) state that a researcher is free to employ any research approach as long as the method enables him or her to complete the research and achieve its objectives. However, it is essential that the researcher consider the nature of the research inquiry and the variables that affect the research process. The researcher has to evaluate whether the methodology is able to find plausible answers to the research questions within the broad context of the nature and scope of the research issue.

The current research concerns media financial discourse on the collapse of Lehman Brothers. Given the research issue under study, a qualitative method of discourse analysis is the main method employed. This chapter describes the research method and discusses the salience, merits, and demerits of the method adopted. The chapter also presents the theoretical framework adopted in this study.

Research Method and Design

As noted in the preceding chapters, the main purpose of this study is to examine the effects of the 2008-2010 financial crisis on public discourse, with regard to the collapse of Lehman Brothers. The study is qualitative in nature and largely involved the use of secondary data to provide the information needed to analyze public discourse on the collapse of Lehman Brothers. Polkinghorne (2005) states that qualitative research is a research method that relies extensively on language data.

According to Shrank (2002), qualitative research is “a form of systematic empirical inquiry into meaning” (p. 5). Qualitative research thus follows a “planned, ordered and public” approach grounded in the world of experience to understand others’ perception of their experiences (Geothals, Sorenson & MacGregor, 2004). This means that qualitative researchers “study things in their natural settings, attempting to make sense of, or interpret, phenomena in terms of the meanings people bring to them” (Denzin & Lincoln, 2000, p. 3). As Firestone (1987) observes, qualitative research endeavors to understand reality from the actor’s point of view.

According to Marshall and Rossman (1995), qualitative research is based on the collection of data from different sources, and the data collected form the basis for reporting the findings of the study and making recommendations. This study involved a critical review of newspaper articles on the case study as well as a critical review of scholarly articles on the 2008-2010 financial crisis to provide background information on the topic.

While newspaper articles provided the primary data for the study, secondary data formed the basis for the literature review. The use of secondary data in social research is in line with a number of researchers who have emphasized the need for a thorough literature review as the first step in virtually all research studies (Neuman, 2003; Gratton & Jones, 2003). Cohen (1996) stated that the use of primary data in any research study offers the researcher several advantages, including: “collection of spontaneous data; data collected is a true reflection of the speakers’ views and; the event being communicated has real-world experiences” (p. 391-2). Newspaper texts are firsthand expressions of the writers’ linguistic ability and provided appropriate data for a qualitative analysis of the case.

Many researchers have recognized the benefits of qualitative research in social inquiry (Conger, 1998; Bryman, 1984). Drawing on the work of previous authors, Geothals, Sorenson & MacGregor (2004) summarized the advantages of qualitative research methodology as follows:

flexibility to include unexpected ideas and examine processes effectively; sensitivity to contextual factors; ability to study symbolic dimensions and social meaning and; increased opportunities to develop empirically supported new ideas and theories, for longitudinal and in-depth exploration of phenomena, and for more relevance and interest for practitioners. (p. 2)

Similarly, Matveev (2002) summarized the strengths of qualitative research to include:

ability to obtaining a realistic feel of the world that lacks in quantitative research; flexible approach to data collection and analysis; presentation of a holistic view of the case under investigation; interactive approach to research and; descriptive capability of data. (par. 14)

Unlike quantitative research, which mainly undertakes surveys and generalizes findings to the whole population, qualitative research studies subjects holistically to understand the interaction between their experiences and perspectives. In addition, qualitative inquiry keeps the researcher close to the case under study, so the information generated is often rich, naturalistic, and context-bound (Bullock, Little & Millham, 1992). In this regard, Borg and Gall (1989) emphasize that the aim of qualitative research is to generate unique knowledge about a specific context or individual. Given these benefits, qualitative research was the most appropriate paradigm for this study.

Despite its many benefits, the qualitative research paradigm also has some weaknesses, which Matveev (2002) summarized as:

Possibility of deviation from the original research objective; different researchers are likely to arrive at different conclusions using the same information depending on their personal characteristics; inability to explore causal relationship between phenomena; possibility of non-consistent conclusions due to difficulties in explaining the difference between quality and quantity of information obtained from different respondents; explanation of targeted information requires high level of experience and; lack of consistency and reliability given the flexible nature of qualitative research. (par. 15)

Matveev (2002) thus advised that qualitative research should be preferred only if it is the most appropriate paradigm to explore the phenomenon under study.

Analyzing public discourse during the 2008-2010 financial crisis requires a methodology that allows for flexibility and in-depth exploration of the topic, hence the rationale for a qualitative approach. The topic under study is qualitative in nature. It is guided mainly by “what” and “how” questions, since it examines the effects of the 2008-2010 financial crisis on public discourse in the United States, and such questions are likely to generate qualitative responses. The targeted data for this study are qualitative, so a purely quantitative analysis of them would not be appropriate.

With the research methodology established, the focus now turns to the research design employed in this study. Creswell (1998) (as cited in Polkinghorne, 2005) proposed different designs for qualitative research, including phenomenology, ethnography, grounded theory, case study, and biography (p. 137). This study used the case study research design. Although the study set out to examine the effects of the 2008-2010 financial crisis on public discourse in the United States, it took a case study approach and concentrated only on the collapse of Lehman Brothers.

Tellis (1997) recommends case study design for studies that require a holistic, in-depth investigation of a phenomenon. The topic under study required an in-depth analysis of the immense literature available, hence the appropriateness of the case study design. Further, case studies are based on real-world phenomena and are therefore easier to investigate than research designs based on assumed models. A case study design is thus the most appropriate for studying the effects of the recent financial crisis on public discourse in the United States. Moreover, the topic generated an immense literature that would be cumbersome to analyze if the topic were addressed as a whole. In this regard, Feagin et al. (1991) observe that:

The case study offers the opportunity to study these social phenomena at a relatively small price, for it requires one person, or at most a handful of people, to perform the necessary observations and interpretation of data, compared with the massive organizational machinery generally required by random sample surveys and population censuses. (p. 2).

Narrowing the study down to the collapse of Lehman Brothers ensured that the researcher worked with a manageable volume of literature. Working with a pre-defined sample also minimized the chances of sampling bias that might have occurred had the researcher picked from a variety of cases.

Despite their strengths, qualitative case study designs also have several weaknesses. Guba and Lincoln (1981) argue that case study research is likely to face serious ethical problems, since the researcher has control over the data collected and could thus collect only what he or she deems important in the case. Case study designs are also associated with limitations in terms of validity, generalizability, and reliability (Guba & Lincoln, 1981).

As Hamel (1993) observes, case studies have been criticized for their lack of rigor in data collection and analysis and for their lack of representativeness (p. 23). The researcher was well aware of these limitations but still found a case study design the most appropriate for this study. The massive volume of information available on this topic could only be handled using a case study approach.

Under the research method and design described above, the population for analysis in this study included all the newspaper articles on the 2008-2010 financial crisis published in The New York Times and The Wall Street Journal from 2008 to 2010. However, the analysis considered only articles on the collapse of Lehman Brothers. As Tellis (1997) observed, case study research does not require any sampling, since the researcher has to collect as much information as possible from the subjects in the case.

The researcher thus analyzed as many articles as could be found within the sample frame in order to examine the various linguistic-rhetorical features present in them. The choice of this sample frame was guided by the topic under study, which focuses on a case study analysis of articles on the collapse of Lehman Brothers. The sample frame consisted of 324 articles from The New York Times and 441 articles from The Wall Street Journal during the three years of financial crisis.

Data Collection

The main purpose of data collection in any research study is to gather important information about a phenomenon that can guide in the explanation of its important features. Qualitative data collection seeks to gather information that can be used to explore issues and understand them including their influence on people’s way of thinking and their life in general. Different research paradigms utilize different data collection tools to achieve this purpose.

Quantitative research uses tools such as surveys for data collection. Qualitative research, on the other hand, uses data collection tools such as participant observations, field interviews, archival records, and documents, among others. Unlike quantitative data, which are usually numerical, qualitative data take the form of written and spoken language and therefore require data collection tools that are able to capture this type of data. As Polkinghorne (2005) observed, language data are “interrelated words combined into sentences and sentences combined into discourses” (p. 138). In this regard, Boojihawon (2006) states that qualitative research can use any of the following data collection methods: “

- documents,

- archival records,

- interviews,

- direct observation,

- participant observation

- artifacts” (p. 72).

The researcher can use either a single method or a combination of these or other methods for data collection, and the selection depends on the nature of the research to be undertaken. In this study, the researcher used a single type of data, namely printed texts from newspapers, to answer the research questions of the study.

The data collection methods for this research included information retrieval from archival records, and other documents for completing the research. The quality of data collected determines the validity and reliability of the research findings.

Only a few tenets define the mission of data collection in qualitative research. According to Polkinghorne (2005), the mission of qualitative data collection “is to provide evidence for the experience it is investigating” (p. 138). Each research study has to use a data collection method that fits the research methodology chosen by the researcher. The goals of both quantitative and qualitative research studies are to make the most of responses from the participants and to enhance the accuracy of the results to the maximum extent possible.

Taylor-Powell & Renner (2003) explain that qualitative data consist of words, expressions, and observations, whereas quantitative data consist of numbers. Analysis of qualitative data requires creativity, discipline, and a systematic approach. They further point out that there is no single way to analyze qualitative data; however, the basic approach is to use “content analysis”. Discourse analysis in this paper involves coding and counting the various rhetorical and linguistic features that occur throughout the selected articles in The New York Times and The Wall Street Journal from 2008 to 2010.

During the process of data collection, the study relied predominantly on a particular type of data: texts discussing the collapse of Lehman Brothers that appeared in two leading U.S. newspapers over the period 2008-2010. Specifically, these texts came from The New York Times and The Wall Street Journal. The New York Times is generally thought to be more liberal, while The Wall Street Journal is viewed as more conservative. Therefore, the writers’ political ideologies as reflected in their writing were also reviewed and analyzed.

The researcher relied on a database tool, Factiva, to aid the process of data collection. Owned by Dow Jones & Company, Factiva is a commercial research and information tool that aggregates content from both licensed and free sources and provides organizations with search, alerting, dissemination, and other information management capabilities. Factiva provides access to a wide variety of sources, including newspapers, journals, magazines, photos, and television and radio scripts, among others (Dow Jones website, 2011). Using Factiva, individuals, companies, and organizations are able to access over 28,500 sources from 200 countries in 25 different languages (Dow Jones website, 2011).

The researcher set a time range from January 1st, 2008 to December 31st, 2010. The initial search generated a very large number of newspaper articles on the 2008-2010 financial crisis. The search was then refined to focus on financial markets and banks and eventually narrowed to the case study, Lehman Brothers. To obtain data on the case study, the researcher combined “Lehman Brothers” and “financial crisis” as search key words and selected newspaper articles in which these two key phrases appeared either in the headline or in the first paragraph. By this method, the researcher obtained 324 articles from The New York Times and 441 articles from The Wall Street Journal during the three years of financial crisis.
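The selection rule described above can be illustrated with a short sketch. It is not the Factiva query used in this study; it only shows, under stated assumptions, how the rule could be applied to articles that have already been retrieved. The `Article` record and its fields are hypothetical, the date window is passed in as a parameter, and it is assumed that a key phrase counts when it occurs in either the headline or the first paragraph, matched without regard to case.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Article:
    """Hypothetical record for one retrieved article (not Factiva's schema)."""
    source: str
    published: date
    headline: str
    first_paragraph: str


# Key phrases and newspapers named in the text.
KEY_PHRASES = ("lehman brothers", "financial crisis")
SOURCES = {"The New York Times", "The Wall Street Journal"}


def is_selected(article: Article, start: date, end: date) -> bool:
    """Apply the selection rule: source, date window, and both key phrases."""
    if article.source not in SOURCES:
        return False
    if not (start <= article.published <= end):
        return False
    # Assumption: a phrase counts if it occurs in the headline or the
    # first paragraph, matched case-insensitively.
    lead = f"{article.headline} {article.first_paragraph}".lower()
    return all(phrase in lead for phrase in KEY_PHRASES)


# Invented example record, for illustration only.
sample = Article(
    source="The Wall Street Journal",
    published=date(2008, 9, 15),
    headline="Lehman Brothers seeks bankruptcy protection",
    first_paragraph="The filing marks a new phase of the financial crisis.",
)
print(is_selected(sample, date(2008, 1, 1), date(2008, 12, 31)))
```

Requiring both phrases in the headline or first paragraph, rather than anywhere in the text, favors articles whose main subject is the case over articles that mention it only in passing.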

Theoretical Framework and Data Analysis

Before discussing the data analysis approach used in this study, it is necessary to set out the theoretical framework that guides the data analysis process.

Theoretical Framework

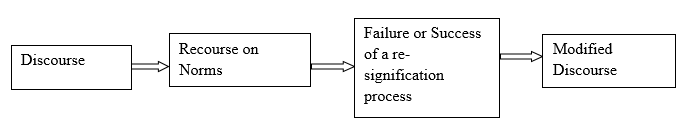

Discourses play a critical role in influencing the way people perceive a phenomenon. A theoretical framework for discourse analysis must therefore address why and how discourses influence society and provide guidelines for tracing possible changes in discourses on the same topic in the same society (Fuchs & Graf, 2010). Discourses are constantly modified to reflect changing aspects of society through a process that Fuchs & Graf (2010) describe as re-signification, as illustrated in Figure 1.

The theoretical framework presented in Figure 1 postulates that the re-signification process occurs when there is a successful activation of inter-subjectively shared norms (Fuchs & Graf, 2010). Fuchs & Graf (2010) also assert that the success of the re-signification process depends on both the speaker’s discursive power and the reception of the articulation (p. 5).

The theoretical framework of discourse analysis presented above borrows from Foucault’s theory of discourse. In Foucault’s view, discourses are intertwined with power and stretch over all facets of public life in the form of a network (Fuchs & Graf, 2010). In his theory, Foucault argues that discourses “define the area of the true and through this exercise societal power” (as cited in Fuchs & Graf, 2010, p. 7).

Guided by Foucault’s theory of discourse, Keller (2005) conceptualized discourse as “practices, which systematically form objects of which they are talking” (translated by Fuchs & Graf, 2010, p. 7). This theoretical statement formed the starting point of this study, which considered discourse “as specific sets of statements as reality constituting moments” (Fuchs & Graf, 2010, p. 7). The articulation of discourses is guided by principles and standards that Foucault referred to in his theory as norms. Norms influence how people act and express themselves, as they determine what can be said in a society without fear of sanctions (Fuchs & Graf, 2010).

In this regard, recourse to norms triggers a set of values and ideas, based on well-established foundations of knowledge, that are present in public discourse (Fuchs & Graf, 2010). It is through recourse to norms in public discourse that changes in the meaning of discourses become possible, hence the process of re-signification. The norms shaping public discourses are either dominant or marginal.

Dominant norms may persist over a long period of time, but they may also be discredited and so lose their value in shaping public discourse. Similarly, marginal norms may apply only to a certain context and vanish with time, yet become significant again when a context similar to the one in which they were used reappears. For instance, a financial crisis is a phenomenon that may recur only once in several decades. The marginal norms used in the discussion of a previous crisis may resurface when another financial crisis occurs, in the same way that some prominent norms used in the discussion of the previous crisis may become less prominent in the new one.

This constant change in norms has a direct influence on the behaviour of both the actor and the recipients, hence the process of re-signification (Fuchs & Graf, 2010). The success or failure of discourse re-signification is in turn influenced by the power of institutional frames. Only actors who occupy a powerful discourse position will be heard in the ‘discursive swarm’ (Fuchs & Graf, 2010). For instance, the public discursive swarm of the 2008-2010 financial crisis was dominated by the media owing to their powerful discourse position. Therefore, the norms that shaped the discussion came mainly from the media.

In summary, discourses are statements that people use to express their ideas about real-world phenomena. They are governed by norms that change over time, which makes it possible for discourse to be modified through a process known as re-signification. The success or failure of re-signification is influenced by how powerful the positions of the actors in the discursive swarm are.

Data Analysis

This stage of the analysis took a deliberately “broad sweep” of the data to obtain an overview of the most significant episodes and characteristics and to relate them to the impact of the financial crisis, specifically the failure of Lehman Brothers, on financial discourse in the U.S. Data analysis is a critical stage in any research study, as it directly influences the interpretation and understanding of findings.

Researchers are thus advised to use techniques that match the research objective, methodology, and design. The theoretical framework adopted by the researcher determined the appropriate data analysis tools. In keeping with its theoretical framework and objective, this study set out to carry out a discourse analysis of the 2008-2010 financial crisis in the U.S. with a focus on the collapse of Lehman Brothers. The researcher thus found it appropriate to use the discourse analysis technique for data analysis in this study. Foucault (1976) defined discourse as “the expression and configuration conditions of the society at the same time” (as cited in Fuchs & Graf, 2010, p. 7).

The theoretical framework of discourse offers a broad perspective for understanding the relationship between norms and discourse. As Fuchs & Graf (2010) asserted, understanding the implications of a discourse for policy and society in general calls for an understanding of the norms activated in it. Norms also influence how discourses are articulated to express experiences of a phenomenon in society. While discourse theory is broad and elaborate, its translation into discourse analysis requires a distinction between the theoretical framework and empirical analysis.

In this regard, Keller (2005) argues that: “discourse analysis is a master frame for the micro-oriented analysis of language in use, which is based on pragmatic linguistics and conversational analysis” (p. 2). Therefore, discourse analysis is differentiated from discourse theory in that while the theory provides the theoretical guideline for studying discourses, discourse analysis is more concerned with the scientific analysis of the linguistic components of spoken and written experiences.

This study borrowed from Jäger (2001) and utilized an analysis approach that critically evaluated the discourses in the recent financial crisis. Jäger (2001) provides a procedural framework for the analysis of discourses using the assumption that “structures that create discourse are detectable, changeable, and influence what is conceived as being true” (as cited in Fuchs & Graf, 2010, p. 9). Jäger thus provided five steps for discourse analysis namely:

- the analysis of the institutional frame of the discourse;

- examination of the text at the surface to evaluate the modalities of the statement;

- identification of linguistic-rhetorical means used in the text;

- explanation of content-ideological statements;

- integration of findings from different steps. (as cited in Fuchs & Graf, 2010, p. 10).

Applying Jäger’s approach, however, proved somewhat complex in this study, which led the researcher to modify it so that it was simpler and easier to work with. The researcher modified step two of Jäger’s discourse analysis, since it proved difficult to undertake a ‘text surface’ analysis of the material analyzed in this study.

This was partly because the researcher used online versions of the newspaper articles, most of which omitted the graphical illustrations common in hard copy versions. For this reason, the researcher modified the second step to capture content analysis as opposed to Jäger’s ‘text surface’ analysis. Content analysis was found to be more significant in this study than ‘text surface’ analysis.

This modification of Jäger’s discourse analysis framework is congruent with Fuchs & Graf (2010), who also observed that applying Jäger’s ‘text surface’ analysis requires advanced analytical processes and that content analysis is much more significant than text surface analysis, especially when trying to build an understanding of the context. As Fairclough (1989) maintained, any discourse analysis should cover three dimensions: description, interpretation, and explanation. Modifying Jäger’s ‘text surface’ analysis to capture content analysis thus ensured that all these requirements were met.

Given the large volume of data analysed in this study, the researcher opted for computer-assisted qualitative data analysis using MAXQDA. MAXQDA is software that can read through large volumes of qualitative data and perform an analysis based on the instructions given. The software is also able to code information, which makes the data analysis process less tedious. However, researchers have to organize their data in a logical format that the software can work with easily.

For purposes of this analysis, the data were first divided into two broad categories based on the source of the information, namely The New York Times and The Wall Street Journal. The data were then divided into subsets by year. A discourse analysis was then carried out as follows using Jäger’s discourse analysis framework.

The first step in the data analysis involved the categorization of articles based on their institutional frame. As Fuchs & Graf (2010) emphasized, a comprehensive discourse analysis requires an understanding of the institutional frame in which a text has appeared (p. 12). An analysis of the institutional frame provides leading information on the intended audience of the article as well as a first understanding of the message in the article.

Categorization of texts in terms of institutional frame also provided an understanding of how institutional norms influence the articulation of discourse. While The New York Times is considered liberal by its readers, The Wall Street Journal is considered conservative. The researcher therefore believed that such beliefs must have influenced the articulation of discourses in texts published in each of the newspapers discussing the same topic.

The second step in the data analysis process involved an analysis of the contents of various newspaper articles discussing the collapse of Lehman Brothers following the recent financial crisis. Unlike the first step, the second step concentrated on the analysis of linguistic contents of the articles, which included both an analysis of ideological statements and linguistic-rhetorical instruments in the texts.

This was achieved through a comparative analysis of linguistic aspects of selected articles on Lehman Brothers. The process involved coding of similar linguistic-rhetorical aspects as well as ideological statements in the texts to be able to analyze how they affected the discussion of the recent financial crisis during the chosen period. Content analysis also provided a framework for examination of the developments in discourse presentation of financial crisis over the years. This was examined by undertaking an analysis of the developments in the presentation of the discussion of the collapsed Lehman Brothers from 2008 through 2010.
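The tallying of coded features over time can be sketched as follows. The coding itself was carried out in MAXQDA; this sketch only illustrates, under stated assumptions, how code frequencies could be compared across newspapers and years once coded segments are available. The tuple layout, the code labels, and the sample data are hypothetical and invented for illustration.

```python
from collections import Counter


def tally_codes(coded_segments):
    """Count occurrences of each (source, year, code) combination.

    `coded_segments` is an iterable of (source, year, code) tuples, one per
    coded text segment. This layout is an assumption made for the example.
    """
    return Counter(coded_segments)


def yearly_trend(tally, source, code, years=(2008, 2009, 2010)):
    """Return the frequency of one code in one newspaper for each year."""
    # A Counter returns 0 for combinations that never occurred.
    return [tally[(source, year, code)] for year in years]


# Invented coded segments, for illustration only.
segments = [
    ("The New York Times", 2008, "metaphor"),
    ("The New York Times", 2008, "metaphor"),
    ("The New York Times", 2010, "metaphor"),
    ("The Wall Street Journal", 2009, "analogy"),
]

tally = tally_codes(segments)
print(yearly_trend(tally, "The New York Times", "metaphor"))
```

Keeping the source and the year in the key allows the same tally to support both the comparison between the two newspapers and the examination of developments from 2008 through 2010.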

The third step involved a critical analysis of linguistic-rhetorical instruments to expose the various textual routines used in the articles. Textual routines include such linguistic styles as metaphors and ellipses, which are basically used to perform certain operations in a text. According to Jäger (2001), identification of textual routines provides additional information necessary for the understanding of discursive threads in an article (as cited in Fuchs & Graf, 2010, p. 17).

The fourth step involved the identification and explanation of ideological statements in the articles analyzed. As Fuchs & Graf (2010) observed, ideological statements portray a writer’s ideas about societal normality and truth as expressed in writing. Identification of such statements aids in the interpretation of the meaning of texts (Jäger, 2001). Common ideological statements in texts are analogies and decouplings (Fuchs & Graf, 2010).

While analogies represent similarities in articles, decouplings represent contrasts in the articles. Identifying similar and contrasting ideas in the articles discussing the 2008-2010 financial crisis with a focus on the collapse of Lehman Brothers provided an understanding of how people’s ideas about a phenomenon in the society can either differ or remain the same.

The final step in this analysis involved integrating the findings from each step of the data analysis process to provide a summarized representation of the findings of the study. This stage also involved the interpretation of the 2008-2010 financial crisis with reference to the collapse of Lehman Brothers. It was also at this stage that the researcher was able to determine whether the study had found answers to all the research questions. The findings of the study are presented through graphical illustrations such as graphs and pie charts. A comprehensive discussion of the findings from the data analysis process will be presented in the succeeding chapter. That discussion is followed by a summary of findings and, finally, conclusions and recommendations to sum up the study.

Research Limitations

The researcher believed that the most appropriate approach for this study was a case study analysis. While a case study design narrows a study down to a manageable scope, this study may be limited in its approach, as a case study analysis of the public discourse on the collapse of Lehman Brothers may leave out other important linguistic aspects of the general topic. The limitations of case study design have been addressed earlier in this chapter.

The findings of this study may thus not be applicable to other cases under the broad topic of study. The study was also limited by time, given the large volume of data to be analyzed within a short period. Some important articles may have been left out in the effort to reduce the information to a manageable size. Analyzing the linguistic contents of the texts also required a manual analysis of the information, which demanded a high level of expertise and was time consuming. The researcher, however, managed to overcome some of these limitations and carried out this study in a manner deemed ethical and professional.

References

Borg, R. W., & Gall, D. M. (1989). Educational research: an introduction. New York: Longman.

Boojihawon, D. K. (2006). International entrepreneurship strategy and managing network dynamics: SMEs in the UK advertising sector. In F. M. Fai & E. J. Morgan (Eds.), Managerial issues in international business. Academy of international business (pp. 72-101). New York: Palgrave Macmillan.

Bryman, A. (1984). The debate about quantitative and qualitative research: A question of method or epistemology? The British Journal of Sociology, 35(1), 75-92.

Bullock, R., Little, M. & Millham, S. (1992). ‘The relationships between quantitative and qualitative approaches in social policy research.’ In J. (ed.), Mixing methods: qualitative and quantitative research. n.p: Avebury.

Cohen, A.D. (1996). ‘Speech acts,’ In McKay, S. L. & Hornberger, N. H. (Eds). Sociolinguistics and language teaching (pp. 383-420). Cambridge: Cambridge University Press.

Conger, J. (1998). Qualitative research as the cornerstone methodology for understanding leadership. Leadership Quarterly, 9(1), 107-121.

Denzin, N. & Lincoln, Y. (Eds.) (2000). Handbook of qualitative research. London: Sage Publication Inc.

Denzin, N. K., & Lincoln, Y. S. (1998). Strategies of qualitative inquiry. Thousand Oaks, CA: Sage Publications.

Dow Jones (2011). A portal to Dow Jones Factiva to our publisher. Web.

Fairclough, N. (1989). Language and power. London: Longman.

Feagin, J. R., Orum, A. M. & Sjoberg, G. (1991). A case for the case study. Chapel Hill, NC: University of North Carolina Press.

Firestone, W. A. (1987). Meaning in Method: The rhetoric of qualitative and quantitative research. Educational Researcher, 16(7), 16-21.

Fuchs, D., & Graf, A. (2010). “The financial crisis in discourse: Banks, financial markets, and political responses.” Paper presented in the SGIR International Relations Conference, Stockholm.

Geothals, G., Sorenson, G. & MacGregor J. (Eds.) (2004). Qualitative research. In encyclopaedia of leadership. London, Thousand Oaks CA: Sage Publications.

Gratton, C., & Jones, I. (2003). Research methods for sport studies. New York: Routledge.

Guba, E., & Lincoln, Y. (1981). Effective evaluation. San Francisco: Jossey-Bass.

Hamel, J. (1993). Case study methods. Qualitative Research Methods, Vol. 32. Thousand Oaks, CA: Sage.

Jäger, S. (2001). Kritische Diskursanalyse. Eine Einführung (3rd ed.). Duisburg: DISS.

Keller, R. (2005). Analyzing discourse. An approach from the sociology of knowledge. Forum: Qualitative Social Research, Vol. 6 (3) Art 32.

Marshall, C., & Rossman, G. B. (1995). Designing qualitative research (2nd ed.). Newbury Park, CA: Sage.

Matveev, A. V. (2002). The advantages of employing quantitative and qualitative methods in intercultural research: practical implications from the study of the perceptions of intercultural communication competence by American and Russian managers. Bulletin of Russian Communication Association, 1 (168), 59-67.

Neuman, W. L. (2003). Social research methods: Qualitative and quantitative approaches, 5th ed. New York: Allyn & Bacon.

Polkinghorne, D. E (2005). Language and meaning: Data collection in qualitative research. Journal of Counselling Psychology, 52(2), 137-145.

Reyes, M. (2004). Social research; A deductive approach. USA: Rex Bookstore Inc.

Shrank, G. (2002). Qualitative research. A personal skills approach. New Jersey: Merrill Prentice Hall.

Taylor-Powell, E., & Renner, M. (2003). Analyzing qualitative data. Program development & evaluation. Wisconsin: Madison.

Tellis, W. (1997). Application of a case study methodology. The Qualitative Report, 3(3). Web.