Performance requirements are important to consider when developing a solution. It is unacceptable to have a web service that takes several seconds to reply to a simple request. Therefore, the developer should consider the available methods of improving the performance of their code. Chapter 12 discusses parallel programming, which is a relatively new concept and became popular when multicore processors entered the market. Despite appearing straightforward, parallelism is a complex topic, and writing multithreaded applications can be error-prone and tedious.

Overview

Until the early 2000s, processor manufacturers increased the performance of their products by adding transistors and raising clock speeds, so the speed of programs was primarily determined by the speed of the CPU. However, this trend could not be sustained, for several reasons. First, there is a limit to how small transistors can get. Second, as the number of transistors increases, so do the power requirements and the difficulty of dissipating heat. A new approach to making programs faster was needed, and the solution was parallel computing. The idea is that several processors can be used to execute a program in parallel, thus reducing its execution time. In the contemporary software development industry, parallelism is a desired goal, but implementing it is challenging.

Concurrency and Parallelism

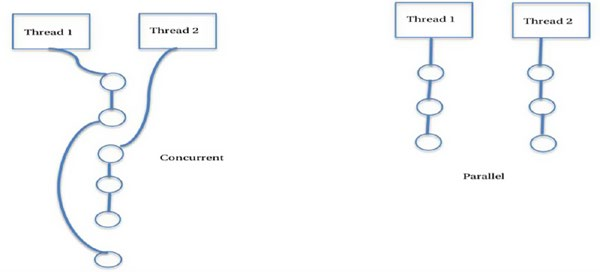

When speaking of parallelism, it is important to distinguish between concurrency and parallel execution. Concurrency means that parts of a program may execute either sequentially or in parallel, and the order in which they execute is not significant. One example is a user interface with a download button. When the button is clicked, the download begins, but the user is free to click other buttons and continue interacting with the application. The program appears to run in parallel, even on a single-processor machine. Parallelism goes a step further: it lets a concurrent application execute separate tasks on different processors at the same time, increasing the overall speed of execution. The diagram above helps visualize the difference between the terms.
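The download-button example can be sketched roughly as follows. This is a minimal illustration, not a real user interface: the `download` function is a hypothetical stand-in that sleeps instead of using the network, and the loop that counts "events" is an assumed substitute for a UI event loop.

```python
import threading
import time


def download(result):
    """Hypothetical slow download; sleeps instead of touching the network."""
    time.sleep(0.2)
    result["status"] = "done"


def run_ui_with_background_download():
    result = {"status": "pending"}

    # The "button click": start the download on its own thread.
    worker = threading.Thread(target=download, args=(result,))
    worker.start()

    # The "UI" keeps handling events while the download is in progress.
    handled_events = 0
    while worker.is_alive():
        handled_events += 1
        time.sleep(0.01)

    worker.join()
    return handled_events, result["status"]


events, status = run_ui_with_background_download()
print(f"handled {events} UI events while downloading; download {status}")
```

The two activities are concurrent because neither has to finish before the other makes progress. Whether they also run in parallel depends on the hardware and the runtime.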

Performance gains come almost for free when the processes that run in parallel are independent of each other. For instance, browser tabs have no dependencies on one another and can freely run on separate cores. However, challenges emerge when different parts of a single program need to be parallelized. In that case, the developer must decide which data is shared between portions of the code. Parallelism within a program is therefore not possible without writing suitable code.

Parallel Programming

The goals of parallel programming are scalability and performance. When the size of the data increases, one should be able to add processors to keep the execution time the same. When the size of the data is fixed, adding CPUs should reduce the execution time. The fundamental unit of parallelism is a thread, which is a sequence of instructions with everything it needs to execute concurrently with other threads. When multiple threads are running, they communicate through shared variables because threads of a single program share the same address space. A process, on the other hand, has its own private address space and contains one or more threads. Spawning new threads and processes is not a cheap operation and adds overhead to the program. Therefore, creating more threads and processes does not automatically yield better performance. Furthermore, Amdahl’s law states that the sequential portion of a program limits the speedup that additional processors can deliver, and in practice there is always some portion of code that is sequential. Therefore, there is a limit to how parallelizable a program can be. Other system components, such as input/output devices and disk drives, impose further limits.
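Amdahl’s law is commonly written as speedup = 1 / ((1 - p) + p / n), where p is the fraction of the program that can run in parallel and n is the number of processors. The sketch below simply evaluates that formula. The function name and the 90% parallel fraction are illustrative assumptions, and the formula ignores the thread-creation overhead mentioned above.

```python
def amdahl_speedup(parallel_fraction, processors):
    """Theoretical speedup predicted by Amdahl's law.

    parallel_fraction: share of the run time that can be parallelized (0..1).
    processors: number of processors applied to the parallel part (>= 1).
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if processors < 1:
        raise ValueError("processors must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / processors)


# Illustrative assumption: 90% of the program is parallelizable.
for n in (1, 2, 4, 8, 1024):
    print(n, round(amdahl_speedup(0.9, n), 2))
```

With p = 0.9 the speedup can never exceed 1 / (1 - p) = 10, no matter how many processors are added, which is the limit the law describes.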

There are several approaches to parallel programming, depending on the requirements. When the same code can be applied to different portions of data, it is called data-parallel computation. When different tasks contribute to one solution, it is called the task-parallel approach. All tasks share the same memory, and the strategy can be implemented by spawning a new thread for each task. When separate processes executing on different cores are part of a larger solution, they interact via the message-passing model. Most modern programming languages have built-in features developers can use to write parallel code.
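The difference between the data-parallel and task-parallel approaches can be sketched with Python's standard `concurrent.futures` module. The function names and the sample data are hypothetical. A thread pool is used here only to keep the sketch short; for CPU-bound work in CPython, `ProcessPoolExecutor` offers the same interface and is usually the better fit.

```python
from concurrent.futures import ThreadPoolExecutor


def sum_of_squares(chunk):
    """The same code, applied to one portion of the data."""
    return sum(x * x for x in chunk)


def data_parallel_sum_of_squares(values, workers=4):
    # Data parallelism: split the data, run identical code on each chunk.
    chunk_size = max(1, (len(values) + workers - 1) // workers)
    chunks = [values[i:i + chunk_size]
              for i in range(0, len(values), chunk_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(sum_of_squares, chunks))


def task_parallel_stats(values):
    # Task parallelism: different tasks over the same shared data,
    # each contributing one part of the overall result.
    with ThreadPoolExecutor(max_workers=3) as pool:
        smallest = pool.submit(min, values)
        largest = pool.submit(max, values)
        total = pool.submit(sum, values)
        return smallest.result(), largest.result(), total.result()


numbers = list(range(1, 101))
print(data_parallel_sum_of_squares(numbers))
print(task_parallel_stats(numbers))
```

In the first function every worker runs `sum_of_squares` on its own slice. In the second, each worker runs a different function on the full list.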

Available Constructs

In modern high-level programming languages, the lowest level of abstraction over concurrent blocks of code is threading. Developers can create threads, stop them, and join them to obtain the desired behavior. When different threads share the same set of variables, access to those variables must be synchronized. When concurrent parts of a program need exclusive access to some portion of memory, they can lock it using a mutual exclusion (mutex) strategy. In object-oriented programming languages, such as Java, mutex objects can be created to implement this functionality.
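As a rough sketch of these constructs, the example below creates several threads, joins them, and protects a shared counter with a mutex. It uses Python's standard `threading` module rather than Java. The `Counter` class and `run_workers` function are hypothetical names chosen for illustration.

```python
import threading


class Counter:
    """Shared state guarded by a mutex (threading.Lock)."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        # Only one thread at a time may execute this block.
        with self._lock:
            self.value += 1


def run_workers(num_threads=4, increments_per_thread=10_000):
    counter = Counter()

    def work():
        for _ in range(increments_per_thread):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(num_threads)]
    for t in threads:
        t.start()   # create and start each thread
    for t in threads:
        t.join()    # wait for every thread to finish
    return counter.value


print(run_workers())
```

Without the lock, the read-modify-write in `increment` could interleave between threads and lose updates, so the final count would not be guaranteed to equal the number of increments performed.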

In lower-level languages, such as C and C++, parallelization can be achieved at build time. Some C and C++ compilers can automatically transform sequential code so that parts of it run in parallel. However, this automatic approach rarely yields tangible benefits. When a developer knows which parts can be parallelized and how, they can use the OpenMP application programming interface. The developer annotates the code with OpenMP pragmas and directives to indicate which portions of the code need to run in parallel.

Real-World Applications

Concurrency and parallelism are essential features of any modern software solution. One common example where concurrent execution is evident is an application with a user interface. For instance, users expect a mobile application to stay responsive while the program is handling network connections. If all tasks are executed on the same thread, the interface has to freeze whenever the program performs other duties. Also, when users launch a mobile application, they expect that a notification will still arrive whenever someone calls them. Without parallelism and concurrency, the majority of the functionality of contemporary operating systems would not be possible. Multitasking can be achieved only if the system supports parallel and concurrent execution. Therefore, the content of this chapter is important for becoming a proficient software developer.

Conclusion

Chapter 12 discusses parallelism and concurrency and explains the motivations behind their emergence. System performance from a hardware perspective cannot be infinitely improved due to limitations regarding heat dissipation and power consumption. Therefore, it was clear that programs needed to be written in a more efficient manner. Having separate parts of the program execute on different processors has become a common way of increasing performance. This chapter explains the importance of parallelism and provides an overview of available models and tools to implement concurrency and parallel execution in software solutions.