Introduction to Markov chains

A Markov chain is a stochastic (random) process that models a system with multiple states and the transition probabilities between them. A stochastic process is a “mathematical model, which is scientifically proven, that advances in subsequent series that is from time to time in a probabilistic manner” (Miller & Homan 1994, p. 55). More precisely, the next state of the system depends only on the present state and not on the states that preceded it. This approach offers a good method for evaluating probabilities, state transition periods, and the final distribution of subjects in systems with strong dependencies. In contrast to other analytical tools used in risk assessment, Markov chains are applicable to systems with overlapping states. There are two main types of Markov chains, namely homogeneous and non-homogeneous.

Markov chains originated from the Markov stochastic process, which is used to model a wide assortment of issues related to consistency and maintainability. The approach owes its existence to the Russian mathematician Andrey Markov, who studied how processes correlate with each other using a variety of models (Miller & Homan 1994, pp. 52-58). Typically, a Markov system moves from one state to another within a finite or countable set of possible states. Markov initiated the theory of “stochastic processes using different mathematical models between the years 1856-1922 when the mathematician invented the processes” (McLachlan & Krishnan 1997, p. 99). The term Markov chain often refers to the discrete-time Markov chain (DTMC). A discrete random process involves a system that changes from one distinct step to the next depending on its present condition. The steps are usually indexed by time, but they can also refer to physical distance or any other measurement that marks a distinct change in state.

Markov chains naturally describe how successive states or experimental outcomes depend on each other over time. For a stochastic process to be a Markov chain, two conditions must hold. Firstly, “the outcome of the experiment must include one of the set of discrete states…secondly; the outcome of the experiment must reveal evidence of dependence on the present state, and not on any of the past states” (Wesley 2003, p. 69).
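The two conditions can be illustrated with a minimal simulation sketch. The two states "A" and "B", their probabilities, and the function names `step` and `simulate` are assumptions made purely for illustration; they do not come from the cited sources. The point to notice is that `step` receives only the current state, so the history of the path cannot influence the next draw.

```python
import random

# Hypothetical two-state chain; states and probabilities are illustrative only.
STATES = ["A", "B"]
P = {
    "A": {"A": 0.9, "B": 0.1},
    "B": {"A": 0.5, "B": 0.5},
}

def step(current, rng):
    """Draw the next state using only the current state (the Markov property)."""
    targets = list(P[current].keys())
    weights = list(P[current].values())
    return rng.choices(targets, weights=weights, k=1)[0]

def simulate(start, n_steps, seed=0):
    """Return a trajectory of n_steps transitions starting from `start`."""
    rng = random.Random(seed)
    path = [start]
    for _ in range(n_steps):
        # Only path[-1], the present state, is passed on to step().
        path.append(step(path[-1], rng))
    return path
```

Calling `simulate("A", 10)` returns a list of eleven states drawn from the discrete set, which is the first condition; the signature of `step` enforces the second.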

Homogeneous Markov chain

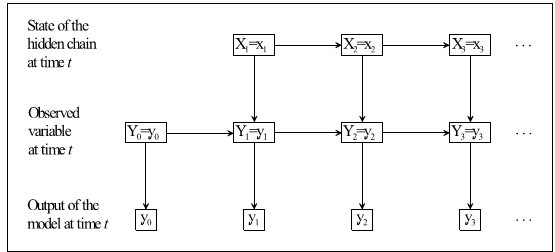

As noted above, there are two basic types of Markov chains, homogeneous and non-homogeneous. Two principles characterise a Markov chain in which a sequence of trials or experiments occurs. For a process to qualify as a Markov chain, “the outcome of the experiment must include one of the set of discrete states, on the other hand, the outcome of the experiment must reveal evidence of dependence on the present state, and not on any of the past states” (Wesley 2003, p. 76). From this definition, Markov chains can also refer to the discrete-time Markov chain (DTMC). A homogeneous Markov chain can be viewed as a special case of the Double Chain Markov Model (DCMM), which relies on first-order dependences, in which the external conditions never change, so that there is one hidden state and one transition matrix. For instance, in a model comprising X and Y as presented in the figure below, X denotes the hidden variable and Y represents the observable variable.

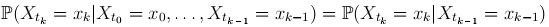

The defining feature of both types of Markov chain is the memoryless property: the process retains no knowledge of its past, and its next move depends only on the present. In other words, only the present state of the process can influence where it goes next. In simple terms, “a homogeneous Markov chain is one where the state-to-state transition probabilities remain constant over time (i.e. cycles) and across subjects” (Craig & Sendi 2002, p. 33). A discrete-time homogeneous Markov model therefore applies the same transition probabilities at every step, while the changes of state themselves occur at random. According to Craig & Sendi (2002), “the key to the Markov model is the Markov property, which states that given the entire past history of the subject, the present state depends only on the most recent past state” (p. 33).
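Because a homogeneous chain uses the same transition matrix in every cycle, the probabilities over n cycles are given by the n-th power of that matrix. The sketch below shows this under stated assumptions: the 2x2 matrix `P` is hypothetical, and the helpers `mat_mul` and `mat_pow` are written out by hand only to keep the example free of external libraries.

```python
def mat_mul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def mat_pow(p, n):
    """Return p raised to the n-th power (n >= 0) by repeated multiplication."""
    size = len(p)
    # Start from the identity matrix, which is p to the power 0.
    result = [[1.0 if i == j else 0.0 for j in range(size)] for i in range(size)]
    for _ in range(n):
        result = mat_mul(result, p)
    return result

# Hypothetical homogeneous transition matrix: the same in every cycle.
P = [[0.9, 0.1],
     [0.5, 0.5]]

# Entry [i][j] is the probability of being in state j three cycles
# after starting in state i.
P3 = mat_pow(P, 3)
```

Each row of `P3` still sums to one, as it must for a matrix of transition probabilities.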

Non-homogeneous Markov chains

Non-homogeneous Markov chains are also widely known and widely applied. Craig and Sendi (2002) posit, “A non-homogeneous Markov chain is one where these probabilities vary over time and/or across subjects” (p.33). They contrast with homogeneous chains, whose transition probabilities remain constant over time and across conditions. Although the underlying theory dates from the early twentieth century, “there has surprisingly existed little written on estimating the probability of a homogeneous model or a non-homogeneous model over time, which is a product of homogeneous chains” (Craig & Sendi 2002, p. 34). However, researchers have managed to distinguish components of non-homogeneous chains and have produced some analyses. Currently, there is no single general expression for non-homogeneous Markov chains, which makes their behaviour over a sequence of steps difficult to determine.
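When the transition probabilities vary by cycle, the multi-cycle probabilities are no longer a matrix power but a product of the individual cycle matrices. The following is a minimal sketch of that idea only; the function `transition_at` and its rule that the chance of leaving state 0 grows by 0.1 per cycle are invented for illustration and are not taken from Craig and Sendi.

```python
def mat_mul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def transition_at(t):
    """Hypothetical cycle-dependent matrix: leaving state 0 gets likelier with t."""
    leave = min(0.1 * t, 1.0)
    return [[1.0 - leave, leave],
            [0.0, 1.0]]

def multi_step(n_cycles):
    """Product P(1) P(2) ... P(n): transition probabilities over n cycles."""
    result = [[1.0, 0.0], [0.0, 1.0]]  # identity matrix
    for t in range(1, n_cycles + 1):
        result = mat_mul(result, transition_at(t))
    return result

two_cycles = multi_step(2)
```

If `transition_at` returned the same matrix for every `t`, `multi_step` would reduce to the matrix power used for a homogeneous chain.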

Continuous-time Markov chain

A continuous-time Markov chain (CTMC), as described in probability theory, is a stochastic process in which state transitions can occur at any moment rather than at fixed steps. The time between transitions is exponentially distributed. CTMCs closely resemble discrete-time Markov chains; the difference lies in the treatment of time. In a discrete-time Markov chain (DTMC), the chain spends exactly one unit of time in a state before the next transition, while in a CTMC the chain spends a random amount of time in each state. A CTMC is written (Xt, t ≥ 0), where X is the state space, Xt is a random variable taking values in X, and t is time. Miller & Homan (1994) present an expression for the state of a continuous-time Markov chain.
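The difference from the discrete-time case can be seen in a short simulation sketch. This is not the expression from Miller & Homan; it is an illustration under assumed values, with hypothetical states "up" and "down", invented exit rates in `RATES`, and an invented jump table `JUMP`. The holding time in each state is drawn from an exponential distribution, after which the chain jumps.

```python
import random

# Hypothetical exit rates (per unit time) and jump probabilities.
RATES = {"up": 0.5, "down": 2.0}
JUMP = {"up": {"down": 1.0}, "down": {"up": 1.0}}

def simulate_ctmc(start, t_end, seed=0):
    """Return a list of (jump_time, state) pairs for jumps before time t_end."""
    rng = random.Random(seed)
    t, state = 0.0, start
    history = [(t, state)]
    while True:
        # Holding time in the current state is exponential with that state's rate.
        t += rng.expovariate(RATES[state])
        if t >= t_end:
            break
        targets = list(JUMP[state])
        weights = [JUMP[state][s] for s in targets]
        state = rng.choices(targets, weights=weights, k=1)[0]
        history.append((t, state))
    return history
```

The jump times in the returned history are irregular real numbers, in contrast to the integer steps of a DTMC.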

Semi-continuous-time Markov chain

Another important type of Markov chain, grouped here under the non-homogeneous Markov chains, is the semi-continuous-time Markov chain. It refers to a stochastic process in which state changes occur at irregular intervals. Such chains are also called semi-Markov chains: stochastic processes with a finite or countable set of states whose trajectories are constant between jumps. Typically, the process jumps at random times, and the time spent in a state is not necessarily exponentially distributed. At the jump times, the sequence of states visited forms a Markov chain with its own transition probabilities. The semi-Markov process X(t) has a finite or countable set of states.
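A rough sketch of such a process follows. Everything specific in it is an assumption for illustration: the states "healthy", "ill" and "dead", the probabilities in `NEXT`, and the choice of uniform and Weibull holding times. What it is meant to show is the structure: the order of states is governed by an ordinary Markov chain, while the time spent in each state follows a distribution that need not be exponential.

```python
import random

# Hypothetical embedded chain: which state follows which.
NEXT = {
    "healthy": {"ill": 1.0},
    "ill": {"healthy": 0.7, "dead": 0.3},
    "dead": {},  # no outgoing transitions
}

def holding_time(state, rng):
    """Time spent in a state; deliberately not exponential (assumed distributions)."""
    if state == "healthy":
        return rng.uniform(1.0, 5.0)      # between 1 and 5 time units
    return rng.weibullvariate(2.0, 1.5)   # scale 2.0, shape 1.5

def simulate_semi_markov(start, max_jumps, seed=0):
    """Return (jump_time, state) pairs for at most max_jumps transitions."""
    rng = random.Random(seed)
    t, state = 0.0, start
    history = [(t, state)]
    for _ in range(max_jumps):
        if not NEXT[state]:
            break  # nowhere left to go from this state
        t += holding_time(state, rng)
        targets = list(NEXT[state])
        weights = [NEXT[state][s] for s in targets]
        state = rng.choices(targets, weights=weights, k=1)[0]
        history.append((t, state))
    return history
```

Replacing `holding_time` with an exponential draw whose rate depends only on the state would turn this sketch back into a continuous-time Markov chain.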

History of Markov chains

The mathematical study of Markov systems began in the early 20th century with the research of the Russian mathematician Andrey Markov (1856-1922), who set out to determine how processes correlate with each other using a variety of models. After a sequence of successive experiments on the interdependence of variables, states or components from one step to the next, Markov initiated the theory of stochastic processes using different mathematical models (Anderson & Goodman 1957, pp. 89-110). Typically, a Markov system moves from one state to another within a finite or countable set of possible states. Many applications of Markov chains emerged later in the 20th century, when the science of successive dependence between states became apparent in several life sciences, including the human sciences.

Current Applications of Markov chains

Since the advent of Markov chain models, science has increasingly recognised their importance in real-life settings. Markov chains matter both in theory and in empirical work, and empirical evidence of their usefulness followed soon after the theory was established, demonstrating that it would be important in different spheres of life. Several phenomena illustrate how Markov chains apply in the current world. Currently, Markov chains are applied in medicine and the medical sciences (immune response, medical prognosis, contagion, genetics and medical research) and in other areas, including housing patterns, voting trends, criminology and random walks.

Application in medicine

Medicine is a dynamic field involving a continuum of practices with numerous applications. Markov chains have played a substantial role in explaining correlations between states that medical practitioners and other scientists would otherwise find difficult to explain. According to Craig and Sendi (2002), “Markov chain is currently the most popular model used for evaluating interventions aimed at treating chronic diseases” (p. 33). One of the best-known applications of Markov chains is in the life sciences, a discipline closely tied to medicine and biomedical practice. The applications covered in this section of the report include the following:

Immune response – One of the best-known applications of Markov chains is in the study of immune response. Such studies are useful where immune responses differ from one individual to another, typically depending on the status of the previous generation. According to the science of immune response, offspring have generally shown greater immunity than their parents in successive periods. Research on immune system reactions in rabbits has proven important in understanding how Markov chains relate to immunity. One scientist classified “immune response in rabbits into four foremost groups based on the strength of each of the response” (Wesley 2003, p. 146). In each successive week, the rabbits moved from one group to another, and these changes can be recorded in matrix form.

Research with mice – Medical research has long relied on mice and guinea pigs in laboratory tests that examine the effects of drugs or chemicals before they are proven safe. Markov chains have been important in several experimental techniques, including medical experiments that ascertain the impact of medicines or chemicals on, for instance, a large group of mice kept in one place (Sonnenberg & Beck 1993, pp. 322-338).

Contagion/poisonous disease – Under real-life circumstances, Markov chains can describe the likelihood of certain health outcomes affecting human beings. According to research by Craig and Sendi (2002), “chronic diseases can often be described in terms of distinct health states and the Markov chain is a simple yet powerful model to describe the progression through these states” (p. 33). Typically, under certain circumstances, “the probability of a person suffering from a particular contagious disease and die from the infections of the disease is take for example 0.05, and the contrary probability of getting infection and survive is 0.15” (Berg 2004, p. 86). On the other hand, “the probability that an existing survivor will infect another person who is capable of dying from it is also 0.05, and the probability that the same person will infect another and survive is 0.15 and so on” (Berg 2004, p. 88). A transition matrix built from this information can show how one state depends on another as the probabilities evolve over time.

Housing patterns – Markov chains can describe patterns of living, especially in the contemporary world. Survey evidence on housing patterns has shown a reasonable fit between Markov models and observed housing patterns. A survey of changes in urban centres established that “75 per cent of the urban population lives in single-family dwelling and the rest, 25 per cent had been living in multiple housing” (Miller & Homan 1994, p. 55). Over a span of five years, housing trends changed, with each outcome depending on the previous one. In a follow-up survey five years later, of “those who had been living in single-family dwelling, 90 per cent were still in those houses and 10 per cent had moved from single to multiple housing” (Miller & Homan 1994, p. 55). The trend in housing among individuals in urban centres is likely to keep changing with time.

Language – Language is passed from one generation to the next, with many language characteristics transmitted from parent to child. One can predict the future language of a newborn by assessing the level of interaction between the child and its parents. The more frequently the child interacts with speakers of a certain language, the more probable it is that the child will adopt that language. According to Wesley (2003), “one of the many applications of Markov chains is in finding long-range predictions…It is not possible to make long-range predictions with all the transition matrices, but for a large set of transition matrices long predictions are possible” (p. 6).
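The housing figures and the remark on long-range predictions can be combined in one small worked sketch. The initial shares (75 and 25 per cent) and the single-family row (90 and 10 per cent) are the survey figures quoted above. The multiple-housing row is not given in the text, so the values 5 and 95 per cent below are an assumption chosen only to complete the matrix, and the function names are likewise illustrative.

```python
# State order: [single-family, multiple housing].
initial = [0.75, 0.25]  # shares from the survey quoted above

P = [
    [0.90, 0.10],  # single-family row, from the follow-up survey quoted above
    [0.05, 0.95],  # multiple-housing row: ASSUMED, not given in the text
]

def propagate(dist, p):
    """One five-year step: new_j = sum over i of dist_i * p[i][j]."""
    return [sum(dist[i] * p[i][j] for i in range(len(dist)))
            for j in range(len(p[0]))]

def long_run(p, start, tol=1e-12, max_iter=10_000):
    """Repeat propagate() until the distribution stops changing."""
    dist = list(start)
    for _ in range(max_iter):
        nxt = propagate(dist, p)
        if max(abs(a - b) for a, b in zip(dist, nxt)) < tol:
            return nxt
        dist = nxt
    raise RuntimeError("no convergence; long-range prediction may not exist")

after_five_years = propagate(initial, P)
limiting = long_run(P, initial)
```

Under the assumed second row, `limiting` does not depend on the starting shares, which is the sense in which a long-range prediction is possible for this matrix. For a matrix where the iteration never settles, `long_run` raises an error instead of returning a misleading answer.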

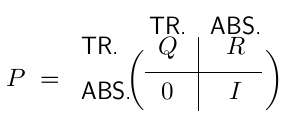

Solving problems in the case of absorbing states

An absorbing state is a state that, once entered, cannot be left. A Markov chain is absorbing if it has at least one absorbing state and every state in the system is capable of reaching an absorbing state. In such a chain, a state that is not absorbing is called transient. To solve a problem involving absorbing states, it is important to put the transition matrix in canonical form, which one obtains by renumbering the states so that the transient states come first. For instance, if there is an absorbing state R and a transient state T, interchanging their positions so that T precedes R yields the canonical form.
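Once the matrix is in canonical form, the standard next step is to invert (I - Q), where Q is the block of transient-to-transient probabilities; the result is usually called the fundamental matrix. The sketch below applies this to a hypothetical random walk on positions 0 to 3 with absorbing ends, which is not an example from the sources. The names `to_transient` and `to_absorbing` stand for the two blocks of the canonical matrix, and the small `inverse` helper is included only to avoid external libraries.

```python
def inverse(m):
    """Invert a square matrix by Gauss-Jordan elimination with row pivoting."""
    n = len(m)
    aug = [[float(x) for x in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        pv = aug[col][col]
        aug[col] = [x / pv for x in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def mat_mul(a, b):
    """Multiply matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

# Canonical ordering: transient positions (1, 2) first, absorbing (0, 3) last.
to_transient = [[0.0, 0.5],   # from 1: to 1, to 2
                [0.5, 0.0]]   # from 2: to 1, to 2
to_absorbing = [[0.5, 0.0],   # from 1: to 0, to 3
                [0.0, 0.5]]   # from 2: to 0, to 3

i_minus_q = [[(1.0 if i == j else 0.0) - to_transient[i][j] for j in range(2)]
             for i in range(2)]

fundamental = inverse(i_minus_q)                        # expected visits
absorption_probs = mat_mul(fundamental, to_absorbing)   # where the walk ends
expected_steps = [sum(row) for row in fundamental]      # steps until absorbed
```

Row i of `absorption_probs` gives the probability of ending in each absorbing state when starting from transient state i, and `expected_steps[i]` gives the expected number of steps before that happens.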

Limitations of Markov chains

A Markov stochastic process does, however, have several limitations that restrict its application in modern healthcare science (LePage & Billard 1992, p. 206). With respect to convergence, Markov chains have shown a degree of failure in certain circumstances, which limits their application in the life sciences. Craig and Sendi (2002) assert, “To assess the cost effectiveness of a new technology, modelling techniques are unavoidable if resources limit a formal study and data from different sources must be combined” (p. 38). Because the literature treats Markov chains from many different perspectives, it has become difficult for researchers to find precise details about the chains (McLachlan & Krishnan 1997, p. 127). Markov chains have been deemed unreliable for determining disease probabilities under some circumstances because convergence is inconsistent. In some cases the state probabilities converge earlier than expected, while in others convergence is delayed, which undermines scientists' confidence in the models.

Properties of Markov chain models

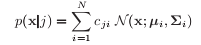

Although the literature identifies many kinds of Markov chain, they share some common properties. A Markov chain may possess an absorbing state, a state in which the process remains once it has been reached. A Markov chain is absorbing if every state can eventually reach such a state. A chain is non-absorbing if it has no absorbing state, so the process can always move on from wherever it is. Markov chains also have the property of recurrence: a state is recurrent, or persistent, if it is not transient, meaning that the chain returns to it in finite time with probability one. Markov chains can also be hidden. In a hidden Markov process, the states are not observed directly; instead, the observations are drawn from an alphabet Σ that may be discrete or continuous.

Reference List

Anderson, T & Goodman, L 1957, ‘Statistical inference about Markov chains’, Annals of Mathematical Statistics, vol. 28 no. 1, pp. 89–110.

Barbu, V, Bulla, J & Maruotti, A 2012, ‘Estimation of the Stationary Distribution of a Semi-Markov Chain’, Journal of Reliability and Statistical Studies, vol. 5, pp. 15–26.

Berg, B 2004, Markov Chain Monte Carlo Simulations and Their Statistical Analysis: With Web-based Fortran Code, World Scientific, Singapore.

Craig, B & Sendi, P 2002, ‘Estimation of the transition matrix of a discrete-time Markov chain’, Health Economics, vol. 11 no. 1, pp. 33–42.

LePage, R & Billard, L 1992, Exploring the Limits of the Bootstrap, Wiley, New York.

McLachlan, G & Krishnan, T 1997, The EM Algorithm and Extensions, Wiley, New York.

Miller, D & Homan, S 1994, ‘Determining transition probabilities: confusions and suggestions’, Medical Decision Making, vol. 14 no. 1, pp. 52–58.

Sonnenberg, F & Beck, R 1993, ‘Markov models in medical decision-making: a practical guide’, Medical Decision Making, vol. 13 no. 4, pp. 322–338.

Stewart, W 2009, Probability, Markov Chains, Queues, and Simulation: The Mathematical Basis of Performance Modelling, Princeton University Press, Princeton.

Wesley, A 2003, Markov Chains: think about it, Pearson, New York.