Literature Review

This review draws on multiple papers from the proceedings of academic research venues, books, and journals on the security of using Kubernetes in cloud computing. The areas examined include security features, threats, vulnerabilities, solutions, standards, and applications. Kubernetes has continued to make it possible to scale automatically and to build fault-tolerant, cost-efficient systems. Sultan et al. (2019) indicate that Kubernetes has quickly emerged as the leading cloud technology because it makes the deployment of modern applications possible. As a result, significant studies have examined the design and applications of Kubernetes, especially in cloud computing, alongside other enabling technologies. Thurgood and Lennon (2019) argue that the paradigm shift from traditional virtualization to container-based virtualization has accelerated in recent years. Containers use kernel features to create an isolated environment in which processes run. The enabling technologies identified include Docker, container orchestration, and Kubernetes.

Dupont et al. (2017) define Docker as an open-source project that offers standardized solutions for easily packaging Linux applications into portable containers. Docker is application-oriented and well suited to microservice environments such as the Internet of Things (IoT). For container orchestration, Hoque et al. (2017) observe that orchestration makes it feasible for Docker to run containerized applications over many hosts in clouds. A cluster architecture enables multiple containers to operate across different hosts and clouds, something that is essential in smart cities. Kubernetes is one of the available orchestration platforms for monitoring and managing IoT applications. On this basis, Dupont et al. (2017) examine Kubernetes as a multi-host container management platform that uses a master to manage Docker containers over multiple hosts.

Technology professionals began to examine cloud computing with elastic scaling of Kubernetes clusters, observing that ubiquitous computing devices are typically small so that they remain unobtrusive. As a result, their processing power and ability to run Artificial Intelligence (AI) applications are limited. Muralidhara et al. (2019) indicate that Kubernetes cloud computing platforms host containerized AI applications that communicate with ubiquitous computing devices. Smart homes can now be connected via sensors to containerized AI-based applications running on Kubernetes, improving users' living experience by automatically adjusting the environment within the home. Ubiquitous computing within the home, powered by Kubernetes and its ability to auto-scale, has improved both living experiences and automation. However, technologists have documented multiple challenges and issues related to using Kubernetes in cloud computing.

A study by Pahl (2015) found that 78% of companies use the open-source container orchestration tool in production. The study identified security, storage, and networking among the top challenges of using Kubernetes in cloud computing. Saxena (2020) accordingly focused on security, highlighting that the complexity of these systems intimidates many organizations. Many companies develop cloud-native strategies, including the platforms and tools to be used to ensure the safe deployment of cloud-native applications. Even so, Al-Dhuraibi et al. (2017) observed that many security challenges arise that threaten the use of Kubernetes in cloud computing. Among the issues highlighted are hardening and compliance, which require close attention to important aspects such as pod security policies when securing a multi-tenant cluster. According to Sayfan (2017), the feature-rich Kubernetes platform makes its default settings hard to understand, which complicates compliance with pod security policies. Sayfan (2017) also identified the use of security benchmarks within organizations as a way to help harden Kubernetes. The configuration guidelines provided by the Center for Internet Security (CIS) allow systems, including Kubernetes, to be hardened against emerging cyber-attacks.

Managing the configurations of all workloads deployed on the cluster has also been identified as a security concern. Pahl and Lee (2015) observed the need for organizations to manage the configurations of all the workloads running on the cluster. Operators package, manage, and deploy complex applications that are designed to run on Kubernetes. They take human operational knowledge and encode it into software that is packaged with the application and shared. This helps ensure that services deployed on Kubernetes maintain supported configurations. A significant issue identified by Saxena (2020) relates to managing multi-tenant clusters: as clusters grow, it becomes more difficult to manage both the workloads deployed on them and the clusters themselves. However, the researchers indicate that multi-tenancy can help manage what could otherwise become chaotic. Radek (2020) identified balancing security and agility as a challenge, since the pressure to innovate constantly makes it easy to treat security as an afterthought. Organizations can benefit by explicitly adding "Sec" into the middle of the DevOps cycle, or by building as much security automation into their pipelines as possible. According to Vohra (2017), orchestration currently lacks a widely accepted solution, which led Docker to develop an orchestration solution and made Kubernetes a relevant and comprehensive project. Such cloud application descriptions support multiple features, including the interoperable description of application and infrastructure cloud services, implemented as containers hosted on nodes in an edge cloud.

According to Sultan et al. (2019), containers in distributed systems require advanced network support. Containers create an abstraction that makes each container a self-contained computation unit. In the traditional setup, Pahl and Lee (2015) observed that containers were exposed on the network through the address of the shared host machine. In Kubernetes, each group of containers (a pod) has a unique IP address that is reachable from any other pod in the cluster, regardless of whether the two are co-located on the same physical machine. Accomplishing this requires advanced routing features for network virtualization. Sayfan (2017) identified data storage as another issue, alongside networking, in managing distributed containers. Flexibility and efficiency in managing containers in Kubernetes clusters are also hampered because pods need to be co-located with their data. Saxena (2020) argued that container-based multi-PaaS can be a solution for managing distributed software applications in the cloud, although it still faces some challenges.
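To make the "one IP per pod" model more concrete, the Python sketch below carves a cluster-wide pod range into disjoint per-node subnets and hands out pod addresses from them. Many Kubernetes network setups avoid address collisions across machines in roughly this way. The CIDR `10.244.0.0/16`, the /24 per-node size, and the `PodIPAllocator` class are illustrative assumptions, not details from the reviewed studies, and real CNI plugins differ in their specifics.

```python
import ipaddress
from typing import Dict, Iterator


class PodIPAllocator:
    """Hypothetical toy IPAM: each node gets a disjoint subnet, each pod a unique address in it."""

    def __init__(self, cluster_cidr: str, node_prefix: int = 24) -> None:
        network = ipaddress.ip_network(cluster_cidr)
        # Generator over disjoint per-node subnets of the cluster-wide pod range.
        self._free_subnets = network.subnets(new_prefix=node_prefix)
        self._free_hosts: Dict[str, Iterator] = {}
        self.node_cidrs: Dict[str, ipaddress.IPv4Network] = {}

    def add_node(self, node: str) -> ipaddress.IPv4Network:
        subnet = next(self._free_subnets)  # StopIteration once the cluster range is used up
        hosts = iter(subnet.hosts())
        next(hosts)  # reserve the first usable address, e.g. for the node's bridge/gateway
        self.node_cidrs[node] = subnet
        self._free_hosts[node] = hosts
        return subnet

    def assign_pod_ip(self, node: str) -> ipaddress.IPv4Address:
        # Addresses never repeat: subnets are disjoint and each host iterator is consumed.
        return next(self._free_hosts[node])


if __name__ == "__main__":
    ipam = PodIPAllocator("10.244.0.0/16")
    print(ipam.add_node("node-a"))       # 10.244.0.0/24
    print(ipam.add_node("node-b"))       # 10.244.1.0/24
    print(ipam.assign_pod_ip("node-a"))  # 10.244.0.2
    print(ipam.assign_pod_ip("node-b"))  # 10.244.1.2
```

Because every node owns a disjoint range, any pod address also identifies the node that hosts it. Routing between nodes (the "advanced routing features" mentioned above) is left to the network layer and is not modelled here.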

Security Concerns, Risks, and Resolutions Using Kubernetes

The widespread adoption of Kubernetes testifies to organizations' trust in its ability to handle the complexity of modern application development and transformation initiatives at scale. According to a recent survey of about 1,350 technology professionals at companies of all sizes, conducted by the Cloud Native Computing Foundation, 76% of respondents use the open-source container orchestration tool in production (Sultan et al., 2019, p. 52976). This is up from only 56% the previous year. Yet although Kubernetes is among the best-known projects on GitHub, most organizations are still intimidated by its complexity (Radek, 2020, p. 39). Companies have recognized the value of building microservices, which break applications into discrete functional parts that can be delivered as containers and managed separately. However, each application then has many parts to manage, particularly at scale.

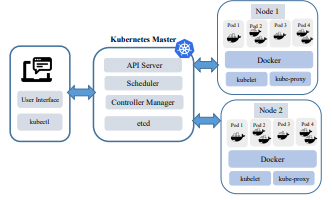

Kubernetes addresses this problem by providing an extensible, declarative platform that automates the management of containers for high availability, scale, and resiliency. However, it is a large, complex, fast-moving, and often confusing system that requires users to learn new skills, as illustrated in figure 1. Organizations advancing their cloud-native strategies, including decisions about which platforms and tools to use to ensure the secure deployment of cloud-native applications, may benefit from understanding the Kubernetes security issues explained below, along with the recommended mitigations.

Hardening and Compliance

When using Kubernetes, one should pay close attention to what is and is not enabled by default. For instance, pod security policies are fundamental to securing a multi-tenant cluster, yet the feature is still in beta and is not enabled by default (Radek, 2020, p. 8). The feature-rich Kubernetes platform can make the defaults difficult to understand, but understanding them is essential. Companies can apply security benchmarks to help harden Kubernetes. The Center for Internet Security (CIS), for instance, provides configuration guidelines for hardening systems, including Kubernetes, against emerging cyber threats. A systematic checklist of this kind can help secure a system as complex as Kubernetes. Because security is always a matter of risk management, organizations need to assess the effect of specific settings on operations and weigh the risks against the benefits.

Some organizations lack the skills or the time needed to harden Kubernetes. Ensuring that the desired cluster configuration is set and maintained can be a major task. It includes ensuring that access to the API server is always over HTTPS, that X.509 certificates are used to authenticate communication between platform components, and that the etcd datastore is encrypted (Sultan et al., 2019, p. 52980). Companies that are overwhelmed by even this relatively short list may wish to consider a commercial distribution of Kubernetes that has already performed the hardening.
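A few of the checks listed above can be expressed as an automated audit of the `kube-apiserver` command line. The sketch below uses real `kube-apiserver` flags (`--anonymous-auth`, `--tls-cert-file`, `--tls-private-key-file`, `--client-ca-file`, `--encryption-provider-config`, `--authorization-mode`). It is only a small illustrative subset, not the CIS Kubernetes Benchmark itself. It assumes flags are written in `--name=value` form, and the file paths in the example are placeholders.

```python
from typing import Dict, List, Tuple


def parse_flags(args: List[str]) -> Dict[str, str]:
    """Turn ['--name=value', '--bool-flag'] into {'name': 'value', 'bool-flag': 'true'}."""
    flags: Dict[str, str] = {}
    for arg in args:
        if not arg.startswith("--"):
            continue  # skip the binary name and positional arguments
        name, sep, value = arg[2:].partition("=")
        flags[name] = value if sep else "true"
    return flags


def audit_apiserver(args: List[str]) -> List[Tuple[str, bool]]:
    """Return (check description, passed) pairs for a handful of hardening checks."""
    f = parse_flags(args)
    # kube-apiserver defaults: anonymous auth on, authorization mode AlwaysAllow.
    modes = f.get("authorization-mode", "AlwaysAllow").split(",")
    return [
        ("anonymous requests disabled", f.get("anonymous-auth", "true") == "false"),
        ("API served over TLS (HTTPS)", "tls-cert-file" in f and "tls-private-key-file" in f),
        ("X.509 client certificates verified", "client-ca-file" in f),
        ("etcd data encrypted at rest", "encryption-provider-config" in f),
        ("RBAC authorization enabled", "RBAC" in modes and "AlwaysAllow" not in modes),
    ]


if __name__ == "__main__":
    # Placeholder command line; a real audit would read the running process or manifest.
    cmdline = ["kube-apiserver", "--anonymous-auth=false", "--authorization-mode=Node,RBAC"]
    for check, passed in audit_apiserver(cmdline):
        print(f"{'PASS' if passed else 'FAIL'}  {check}")
```

Encoding the checklist this way lets it run on every configuration change, instead of being repeated by hand.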

Vulnerabilities

Common exploits of known vulnerabilities include cryptomining, privilege escalation, malware installation, and host access. The problem is widespread: Docker Hub once had to remove 17 malicious images after they had been downloaded from the Internet 4 million times. Although image scanning at the build stage is mandatory, vulnerabilities still pose a security risk to running deployments (Sultan et al., 2019, p. 52980). Effective vulnerability management spans the entire container life cycle and should:

- Detect vulnerabilities in images, including in installed operating system packages and in runtime libraries for programming languages

- Block images with serious vulnerabilities from being pushed to a production-accessible container registry

- Fail builds that contain fixable vulnerabilities above a specified severity

- Use third-party or custom admission controllers in Kubernetes clusters to block the deployment of vulnerable container images (Sultan et al., 2019, p. 52980).
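The last two practices above share one decision rule: reject an image if it carries a fixable vulnerability at or above a severity threshold. The sketch below isolates that rule. The `Finding` record and the severity names are assumptions standing in for whatever format a given scanner emits. In a real cluster the same decision would typically sit behind a validating admission webhook, which is not shown here.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical severity scale; real scanners use similar but not identical labels.
SEVERITY_ORDER = {"LOW": 1, "MEDIUM": 2, "HIGH": 3, "CRITICAL": 4}


@dataclass(frozen=True)
class Finding:
    package: str        # an OS package or a language runtime library
    severity: str       # one of SEVERITY_ORDER's keys
    fixed_version: str  # empty string when no fix is available yet


def admit_image(image: str, findings: List[Finding],
                threshold: str = "HIGH") -> Tuple[bool, List[str]]:
    """Reject the image if any *fixable* finding is at or above the threshold."""
    limit = SEVERITY_ORDER[threshold]
    blocking = [
        f"{image}: {f.package} ({f.severity}, fixed in {f.fixed_version})"
        for f in findings
        if f.fixed_version and SEVERITY_ORDER[f.severity] >= limit
    ]
    return (not blocking, blocking)


if __name__ == "__main__":
    report = [Finding("openssl", "CRITICAL", "3.0.8"), Finding("zlib", "HIGH", "")]
    allowed, reasons = admit_image("registry.example/web:1.0", report)
    print(allowed, reasons)
```

Unfixable findings are deliberately not blocking here. This mirrors the "fixable vulnerabilities above a specified severity" wording, but an organization could choose a stricter rule.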

Runtime Threats

The runtime phase is critical for container security because it brings a new set of security challenges. If an organization has shifted security left and minimized its risk from vulnerabilities and misconfigurations, the main threat at runtime will probably come from external adversaries (Sultan et al., 2019, p. 52981). To mitigate this risk, the following measures should be taken:

- Monitor runtime activity – start by monitoring the most security-relevant container activities, such as process activity and network communications among containerized services and between containerized services and external clients and servers.

- Leverage declarative data – use build-time and deploy-time information to compare observed activity against expected activity at runtime in order to detect suspicious activity.

- Limit unnecessary network communication – runtime is when you can see which network traffic is allowed versus which traffic the application actually needs, giving the organization the chance to remove unnecessary connections.

- Use process allow-lists – observe the application for a period to identify all the processes executed in its normal behavior, then use this list as the organization's policy against future behavior.
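The allow-list practice has two phases: learn a per-container baseline during normal operation, then flag anything outside it. The sketch below shows both. The `(container, executable)` event tuples are a hypothetical stand-in for what a runtime monitoring sensor would report. The class is illustrative and is not any particular product's API.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

Event = Tuple[str, str]  # hypothetical (container name, executable path) from a runtime sensor


class ProcessAllowList:
    def __init__(self) -> None:
        self._baseline: Dict[str, Set[str]] = defaultdict(set)
        self.locked = False

    def observe(self, events: Iterable[Event]) -> None:
        """Learning phase: record every process seen during normal operation."""
        if self.locked:
            raise RuntimeError("baseline already locked")
        for container, exe in events:
            self._baseline[container].add(exe)

    def lock(self) -> None:
        """End the learning window; the baseline becomes the policy."""
        self.locked = True

    def check(self, events: Iterable[Event]) -> List[Event]:
        """Enforcement phase: return events that fall outside the learned baseline."""
        return [(c, exe) for c, exe in events if exe not in self._baseline.get(c, set())]


if __name__ == "__main__":
    policy = ProcessAllowList()
    policy.observe([("web", "/usr/sbin/nginx"), ("db", "/usr/bin/postgres")])
    policy.lock()
    print(policy.check([("web", "/usr/sbin/nginx"), ("web", "/tmp/xmrig")]))
```

Keeping the baseline per container means a shell that is normal in one workload is still flagged in another.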

Managing the Configurations of All Workloads Deployed on the Cluster

Besides managing the configuration of the cluster itself, organizations need to manage the configurations of all workloads running on it. The open-source tool Helm, through Helm charts, can automate application configuration and provisioning for Kubernetes. It can also deploy simple or complex applications made up of many separate services (Radek, 2020, p. 42). Although Helm is valuable for Day 1 operations, it does not extend to Day 2 operations, which is where Kubernetes Operators shine. Operators package, deploy, and manage complex applications designed to run on Kubernetes. They take human operational knowledge and encode it into software that is packaged with the application and shared. This makes it easier for application developers to provide automated life cycles for the services that run on Kubernetes (Sultan et al., 2019, p. 52982). Helm charts can also be packaged into Kubernetes Operators and used together. From a security perspective, Operators ensure that services deployed on Kubernetes maintain supported configurations. If an unsupported configuration is applied to a deployed service, the Operator can restore the service to its original, declared configuration. A particularly interesting aspect is that Kubernetes Operators can be used to manage Kubernetes itself, making it easier to deliver and automate secure deployments (Radek, 2020, p. 8).
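The "restore the declared configuration" behavior above follows the reconcile pattern used by Kubernetes controllers. The sketch below shows one reconcile pass: compare the declared spec with the live spec and revert any drifted field. It is a toy model under stated assumptions. Specs are plain dictionaries, the field names are hypothetical, and fields the operator does not declare are left alone. A real Operator runs this logic repeatedly in response to watch events from the Kubernetes API.

```python
import copy
from typing import Any, Dict, List, Tuple

Spec = Dict[str, Any]


def reconcile(declared: Spec, live: Spec) -> Tuple[Spec, List[str]]:
    """One pass of a toy operator: revert drift on every field the operator declares."""
    repaired = copy.deepcopy(live)  # never mutate the observed state in place
    drifted: List[str] = []
    for key, wanted in declared.items():
        if live.get(key) != wanted:
            # Unsupported change detected: restore the declared value.
            repaired[key] = copy.deepcopy(wanted)
            drifted.append(key)
    return repaired, drifted


if __name__ == "__main__":
    declared = {"replicas": 3, "image": "db:12.4", "tls": True}  # hypothetical fields
    live = {"replicas": 3, "image": "db:12.4", "tls": False}
    fixed, changed = reconcile(declared, live)
    print(changed)  # ['tls']
```

Because the pass is idempotent, running it again on already-reconciled state changes nothing. This is what allows controllers to loop safely.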

Managing Multi-tenant Clusters

As Kubernetes scales, it becomes increasingly difficult to manage all the workloads deployed on the clusters as well as the clusters themselves. Multi-tenancy is among the most effective ways to manage what could easily become chaotic. Kubernetes' support for multi-tenancy has come a long way. Key capabilities include the following, which are not always enabled by default.

- Namespaces: They allow companies to isolate multiple teams on the same physical cluster when used together with RBAC (explained below) and network policies (Sultan et al., 2019, p. 52982).

- Role-Based Access Control (RBAC): Determines whether a user is allowed to perform a given action within a cluster or namespace. To simplify the use of RBAC, default roles are defined that can be granted to users and groups either cluster-wide or locally per namespace.

- Resource Quotas in Kubernetes: They limit aggregate resource consumption per namespace, making systems less vulnerable to incidents such as denial of service. By default, containers in a Kubernetes cluster run with unbounded CPU and memory.
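The three capabilities above combine naturally per tenant: one Namespace, a Role and RoleBinding scoped to it, and a ResourceQuota capping its consumption. The sketch below builds those four objects as Python dictionaries using the standard Kubernetes API fields. The output can be serialized to JSON and applied with `kubectl apply -f -`. The team name, group name, role permissions, and quota values are hypothetical choices for illustration only.

```python
import json
from typing import Dict, List


def tenant_manifests(team: str, group: str, cpu: str, memory: str, max_pods: int) -> List[Dict]:
    """Build Namespace, Role, RoleBinding and ResourceQuota objects for one tenant (illustrative)."""
    ns = f"team-{team}"  # hypothetical naming convention
    return [
        {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": ns}},
        {
            "apiVersion": "rbac.authorization.k8s.io/v1",
            "kind": "Role",
            "metadata": {"name": "developer", "namespace": ns},
            "rules": [  # namespace-scoped permissions only
                {"apiGroups": [""], "resources": ["pods", "services"],
                 "verbs": ["get", "list", "watch", "create", "update", "delete"]},
                {"apiGroups": ["apps"], "resources": ["deployments"],
                 "verbs": ["get", "list", "watch", "create", "update", "delete"]},
            ],
        },
        {
            "apiVersion": "rbac.authorization.k8s.io/v1",
            "kind": "RoleBinding",
            "metadata": {"name": "developer-binding", "namespace": ns},
            "subjects": [{"kind": "Group", "name": group, "apiGroup": "rbac.authorization.k8s.io"}],
            "roleRef": {"kind": "Role", "name": "developer", "apiGroup": "rbac.authorization.k8s.io"},
        },
        {
            "apiVersion": "v1",
            "kind": "ResourceQuota",
            "metadata": {"name": "compute-quota", "namespace": ns},
            "spec": {"hard": {
                "requests.cpu": cpu, "requests.memory": memory,
                "limits.cpu": cpu, "limits.memory": memory,
                "pods": str(max_pods),
            }},
        },
    ]


if __name__ == "__main__":
    items = tenant_manifests("payments", "payments-devs", "4", "8Gi", 20)
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

Once a compute quota like this is active, pods in the namespace must declare CPU and memory requests and limits (or receive defaults from a LimitRange). Otherwise the quota system may reject them.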

Balancing Security and Agility

The pressure on organizations to innovate constantly makes it easy to treat security as an afterthought. Ideally, security is built into the DevOps cycle. Even so, organizations benefit from explicitly inserting "Sec" into the middle of DevOps or, put another way, from building as much security automation into the pipeline as possible. To ensure that application development teams can follow security best practices, one must step back and re-examine the CI/CD pipelines (Radek, 2020, p. 12). Are they using automated unit and functional tests? Have they incorporated automated security gates such as integrated vulnerability scanners? Ops can support DevSecOps by adding features to both development and production clusters. These features make it easier for application teams to manage monitoring, alerting, and logging services consistently while developing and running microservice-based applications (Muralidharan et al., 2019). Furthermore, although adding a service mesh to a Kubernetes environment might appear to add complexity, it actually makes critical business logic more visible.
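The questions above amount to a pipeline in which security checks are gates of the same kind as tests: stages run in order, and the first failure stops everything downstream, including deployment. The sketch below models that fail-fast behavior. The stage names and the hard-coded scanner result are hypothetical placeholders. In practice each check would call the team's actual test runner or vulnerability scanner.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]  # (stage name, check returning True on pass)


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first failing gate (fail fast)."""
    log: List[str] = []
    for name, check in stages:
        if not check():
            log.append(f"{name}: FAILED")
            return False, log  # later stages, including deploy, never run
        log.append(f"{name}: ok")
    return True, log


def vulnerability_scan() -> bool:
    critical_findings = 1  # hypothetical result from an integrated scanner
    return critical_findings == 0


if __name__ == "__main__":
    ok, log = run_pipeline([
        ("unit tests", lambda: True),
        ("functional tests", lambda: True),
        ("vulnerability scan", vulnerability_scan),
        ("deploy", lambda: True),
    ])
    print(ok, log)
```

Placing the scan before deployment, rather than as a separate report, is what turns it from advice into an enforced gate.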

Comparison of Azure, AWS, & GCP

Kubernetes is an influential container orchestration platform for automating, scaling, and managing container deployments. Containers are portable assets that let development teams build and deploy small units of functionality at low cost (Sayfan, 2017, p.4). They remove much of the overhead of deployment. They take a large code base and break it into many lightweight components that can be managed quickly and interconnected without the fear of a single module bringing down the entire application. The trouble with many moving parts is the difficulty of keeping track of them. If a container has links to another, one must remember to update every unit to ensure stability across the platform (Zhou et al., 2021, p. 1). Another difference between Kubernetes and other deployment options is that container deployments are continuous (Radek, 2020, p. 8). There is no waiting to compile and then distribute binaries one unit at a time. Instead, Kubernetes continually pushes current changes to containers and deploys them in the environment. This allows code to be deployed quickly without halting productivity for months at a time. In short, containers and Kubernetes are a natural match for cloud environments. In particular, containers are a way to achieve a multi-cloud strategy, because they can run both on-premises and on the major cloud providers. This cross-compatibility makes containers an attractive option for reducing the risk of adopting small services in the cloud (Radek, 2020, p. 3). Microsoft Azure, GCP, and AWS all offer services that can create highly available Kubernetes deployments. The following are descriptions, pros, and cons of each.

Amazon Web Services

AWS is a cloud platform created by Amazon. Of the three cloud providers assessed, it has the most regions, with 16 regions around the world (Englund, 2017, p. 9). AWS is the most mature public cloud, and many enterprises already have a well-established presence on the platform. It has its own proprietary container orchestrator, Elastic Container Service (ECS), which is distinct from Kubernetes (Radek, 2020, p. 7). AWS offers reliable, flexible, scalable, and cost-effective cloud computing solutions, and its large collection of cloud services makes up a mature platform. Kubernetes Operations (kops) has become the de facto standard for creating, upgrading, and managing Kubernetes clusters on AWS. kops is a well-maintained open-source project with an active community.

Pros

Amazon Web Services has several notable advantages. First, it is cost-effective compared with other platforms, offering economical and affordable pricing. Second, it is flexible. Its model allows resources to be scaled up or down, so the business does not have to worry when capacity becomes a problem or when requirements keep changing (Madhuri & Sowjanya, 2016, p. 6). There is no need for guesswork or for investing in detailed technical assessments to determine a company's infrastructure needs. Finally, it provides strong security. Protecting organizations from potential data loss and the risk of cybercrime is among its primary objectives. The platform holds well-recognized compliance certifications and adheres to security regulations around the globe. The fact that organizations such as HealthCare.gov and NASDAQ use AWS is cited as evidence of how trusted and secure the cloud service is.

Cons

The first disadvantage of AWS is computing glitches. Cloud-based services still fail, and this platform is no exception. Although the service is well designed and robust, it is not flawless and is subject to general cloud computing outages (Madhuri & Sowjanya, 2016, p. 6). Second, Amazon is based in the United States and some of its services are region-specific. As a result, not every advertised service may be available in the locality of an organization or individual outside the United States. Finally, there is the lack of intellectual property protection. In 2017, AWS withdrew a clause from its customer agreement that had previously shielded Amazon from patent infringement claims.

Microsoft Azure

Azure Container Service (ACS) allows Kubernetes to be deployed quickly to Azure. ACS was developed by Microsoft and is the most recent of the three clouds' offerings to support Kubernetes. It works much like a deployment template, since it offers little support for upgrading a cluster once it has been deployed (Madhuri & Sowjanya, 2016, p. 5). To upgrade a cluster using ACS, one would need to use ACS to create a new cluster, migrate the containers into it, and delete the old cluster (Englund, 2017, p. 9). A useful feature of Azure is that building templates is more straightforward and can be done from existing infrastructure. Once all the services are created and configured, a snapshot can essentially be taken and redeployed. Those familiar with Amazon's CloudFormation templates will find Azure's system much easier to use.

Pros

High availability makes Azure attractive to organizations (Radek, 2020, p. 7). Unlike some other vendors, Azure offers high redundancy and availability across data centers on a global scale. Because of this, it can offer a service level agreement of 99%, which many businesses cannot match on their own. The second advantage is data security. Azure has a strong focus on security, following the standard security model of identify, assess, diagnose, stabilize, and close. Paired with strong cybersecurity controls, this model has allowed Microsoft to achieve numerous compliance certifications and establish Azure as a leader in security. Finally, there is scalability: Azure makes it simple to scale compute power up or down with a single click.

Cons

The main weakness of this platform is that it demands platform expertise. Knowledge is needed to ensure that all the moving parts work together effectively (Madhuri & Sowjanya, 2016, p. 6). Second, Microsoft Azure requires proper management. Unlike SaaS models such as Office 365, where the end user simply consumes the service, Azure shifts an organization's compute power from the data center to the cloud, where it still has to be managed.

Google Cloud Platform

Google Container Engine (GKE) is the platform's managed Kubernetes service. Google is the top contributor to the Kubernetes open-source project. In GKE, Google manages the Kubernetes master nodes for organizations. This means that one cannot access them, but also that one is not charged for their compute resources. If an organization's cluster grows beyond 4 nodes, GKE charges around $99 per month, which is no different from what it would cost to run one's own master nodes (Englund, 2017, p. 9). Another feature that distinguishes GCP is a built-in global load balancer that is automatically configured when services are created (Mitchell et al., 2019, p. 1). This load balancer is the same one that serves YouTube and Google. On Azure and Amazon, the load balancer is just another container instance that one needs to scale.

Pros

- Abstracts away and manages the Kubernetes master nodes (Radek, 2020, p. 8)

- Nodes use Container-Optimized OS images

- The Kubernetes master can be upgraded via the GCP Console

- Worker nodes can be upgraded, added, or removed via the GCP Console

- Built-in global load balancer

- Optional support for kops

- Streamlined integration with Codefresh

- Better pricing than competitors

- Better pricing compared to competitors

Cons

- Cannot log in to the Kubernetes master nodes to change advanced settings

- Limited components and complex to get started

- Outside the free tier, everything is charged

- Fewer features than Amazon Web Services

Description of prototype build

The main prototype used and tested in the project is a purpose-built architecture that secures the transmission of information in a Kubernetes cluster in a managed cloud vendor environment. An open-source version control system was used as the source of configuration descriptions for deployment automation systems and for scaling microservice applications (Hoque et al., 2017). Kubernetes is integrated with Sealed Secrets, kube-applier, and Git to ensure that the information stored in the version control system is adequately protected. Sealed Secrets consists of two parts: the kubeseal tool on the user side and a controller on the Kubernetes cluster side. The controller first generates an encryption key pair, comprising a private and a public key, and stores it in the cluster. The controller then decrypts cluster objects of type SealedSecret and creates, modifies, or deletes the corresponding objects of type Secret, which hold the decrypted information.
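The controller's job described above can be modelled as a synchronization step. Each SealedSecret's encrypted fields are decrypted, and the result is turned into the desired set of Secrets. The desired set is then compared with the existing Secrets to produce create, update, and delete actions. The sketch below is a simplified model, not the Sealed Secrets project's actual code. The `decrypt` callable is a placeholder for the controller's private-key decryption, objects are reduced to `(namespace, name)` keys, and `existing` is assumed to contain only Secrets previously generated from SealedSecrets. The one real detail it keeps is that Kubernetes Secret `data` values are base64-encoded.

```python
import base64
from typing import Callable, Dict, List, Tuple

Key = Tuple[str, str]             # (namespace, name)
Decrypt = Callable[[str], bytes]  # placeholder for the controller's private-key decryption


def desired_secrets(sealed: Dict[Key, Dict[str, str]], decrypt: Decrypt) -> Dict[Key, Dict[str, str]]:
    """Map each SealedSecret's encrypted fields to Secret data (base64, as Secrets store it)."""
    return {
        key: {field: base64.b64encode(decrypt(blob)).decode("ascii") for field, blob in enc.items()}
        for key, enc in sealed.items()
    }


def sync(sealed: Dict[Key, Dict[str, str]], existing: Dict[Key, Dict[str, str]],
         decrypt: Decrypt) -> List[Tuple[str, Key]]:
    """Return the create/update/delete actions that align Secrets with SealedSecrets."""
    want = desired_secrets(sealed, decrypt)
    actions: List[Tuple[str, Key]] = []
    for key, data in want.items():
        if key not in existing:
            actions.append(("create", key))
        elif existing[key] != data:
            actions.append(("update", key))
    for key in existing:
        if key not in want:  # its SealedSecret was removed from Git / the cluster
            actions.append(("delete", key))
    return actions
```

Only ciphertext ever needs to be committed to Git in this flow, because decryption happens inside the cluster. That is the property the prototype relies on to protect information in the version control system.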

The cluster setup replicates the environmental scenario in which multiple applications are hosted. A similar prototype was deployed, and the scenario was evaluated experimentally. The setup consisted of three sets of machines with different capacities, which host the Docker images to imitate the varied specifications of Internet of Things nodes (Hoque et al., 2017). This is illustrated in figure 2 below. The go-ipfs Docker base images were installed on all three sets of devices.

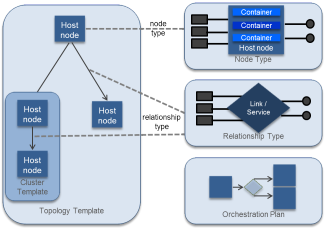

Kubernetes was installed on all the machines, and after the Docker images were created, a master node was enabled on the MacBook to orchestrate the containers. As the principal node, the master controls the remaining machines, which act as execution nodes. Heapster was used to monitor the IoT applications clustered on the Kubernetes platform (Mitchell & Zunnurhain, 2019). Heapster enables the web-based Kubernetes Dashboard on the master, which helps monitor system resource metrics. It collects resource utilization information from every node, and this information can be viewed in the Kubernetes Dashboard. There is no commonly accepted solution to the problems associated with orchestration, but a possible solution can illustrate the approach. Since Kubernetes is a relevant project, a comprehensive solution involved Docker orchestration based on the topology supported by Cloudify PaaS, as shown in figure 4. Cloudify uses TOSCA (Topology and Orchestration Specification for Cloud Applications), which improves the portability of cloud applications and services.
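The monitoring step above boils down to collecting resource samples from each node and reporting them relative to that node's capacity, which is what the Dashboard displays. The sketch below shows this aggregation. Heapster has since been retired in favor of metrics-server, and its real data model is not reproduced here. The `Sample` record, the node names, and the capacity figures are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Sample:
    node: str
    cpu_millicores: int  # CPU in use when the sample was taken
    memory_mib: int      # memory in use when the sample was taken


def node_utilization(samples: List[Sample],
                     capacity: Dict[str, Dict[str, int]]) -> Dict[str, Dict[str, float]]:
    """Average samples per node and express them as a percentage of node capacity."""
    totals: Dict[str, List[int]] = {}
    for s in samples:
        cpu, mem, n = totals.get(s.node, [0, 0, 0])
        totals[s.node] = [cpu + s.cpu_millicores, mem + s.memory_mib, n + 1]
    report: Dict[str, Dict[str, float]] = {}
    for node, (cpu, mem, n) in totals.items():
        cap = capacity[node]
        report[node] = {
            "cpu_pct": round(100.0 * cpu / n / cap["cpu_millicores"], 1),
            "memory_pct": round(100.0 * mem / n / cap["memory_mib"], 1),
        }
    return report


if __name__ == "__main__":
    caps = {"master": {"cpu_millicores": 4000, "memory_mib": 8192},
            "pi-1": {"cpu_millicores": 1000, "memory_mib": 1024}}
    samples = [Sample("master", 1000, 2048), Sample("master", 3000, 4096), Sample("pi-1", 500, 256)]
    print(node_utilization(samples, caps))
    # {'master': {'cpu_pct': 50.0, 'memory_pct': 37.5}, 'pi-1': {'cpu_pct': 50.0, 'memory_pct': 25.0}}
```

Normalizing by capacity matters in a heterogeneous setup like this one. The same absolute load can leave a laptop-class master half idle while saturating a small IoT-class node.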

The combination of development and architecture support with cluster solutions provides the management solution. Multi-PaaS based on container clusters offers a way to manage distributed software applications in the cloud, though the technology faces some challenges. These include the lack of suitable formal descriptions or user-defined metadata for containers beyond tagging images with simple IDs (Ibryam & Huß, 2019). The description mechanisms need to be extended to clusters of containers and their orchestration. As figure 2 above shows, the topology of distributed container architectures needs to be specified, and its deployment and execution orchestrated. The orchestration problem currently lacks a widely accepted solution; as a result, Docker has begun to develop an orchestration solution, making Kubernetes a relevant project and a comprehensive solution (Vohra, 2017). TOSCA supports multiple features, including the interoperable description of application and infrastructure cloud services, implemented as containers hosted on nodes in an edge cloud. Another significant feature is the description of the relationships between service parts and of the operational behavior of these services.

Review of the Candidate Technologies

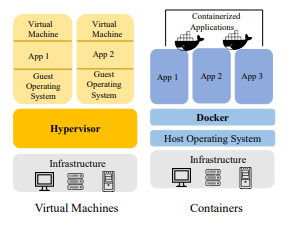

There has been a shift from traditional virtualization to container-based virtualization solutions. These solutions have gained momentum in recent years, driven by the ability of containers to use kernel features that create an isolated environment for running processes (Hoque et al., 2017). Containers use the host system's hardware directly and, unlike hypervisors, do not rely on virtualized hardware. The candidate technologies compared below are Docker, container orchestration, and Kubernetes.

Docker

Docker is an open-source project that offers standardized solutions to ease the implementation of Linux applications within portable containers. LXC and OpenVZ are among the other system-level container services (Saxena, 2020). However, Docker was chosen because it is application-oriented and best suited to microservice environments such as the IoT. Docker's building blocks include Docker containers, which are built from base images (Hoque et al., 2017). Images act as templates from which containers are created, and they are configured with the applications. Each change to an image is stored as a layer, similar to a commit in Git, and images can be shared with a team through Docker Hub. Dockerfiles, which hold a series of instructions, are used to execute commands in Docker automatically or manually. Figure 3 shows a model of a Docker container.
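The layer model above explains why small edits rebuild quickly. Each Dockerfile instruction produces a layer that depends on every layer before it, so a change invalidates that layer and all later ones while earlier layers can be reused. The sketch below models this by chaining a SHA-256 key per instruction, with the content of copied files mixed in for `COPY`-style steps. It is a simplified illustration of the caching behavior, not Docker's actual digest or cache algorithm. The Dockerfile lines are hypothetical.

```python
import hashlib
from typing import List, Optional, Tuple

# (instruction text, bytes of copied files or None) -- simplified model, not Docker's format
Instruction = Tuple[str, Optional[bytes]]


def layer_keys(instructions: List[Instruction]) -> List[str]:
    """Chain a cache key per instruction: key_i = sha256(key_{i-1}, instruction, content)."""
    keys: List[str] = []
    parent = ""
    for text, content in instructions:
        h = hashlib.sha256()
        h.update(parent.encode())
        h.update(b"\0")  # separator so different splits cannot produce the same input
        h.update(text.encode())
        if content is not None:  # COPY/ADD: the copied files' bytes also matter
            h.update(hashlib.sha256(content).digest())
        parent = h.hexdigest()
        keys.append(parent)
    return keys


def reused_layers(old: List[str], new: List[str]) -> int:
    """Count leading layers whose keys match, i.e. layers a cache could reuse."""
    count = 0
    for a, b in zip(old, new):
        if a != b:
            break
        count += 1
    return count


if __name__ == "__main__":
    v1 = [("FROM python:3.11-slim", None), ("RUN pip install flask", None),
          ("COPY app.py /app/", b"print('v1')"), ("CMD python /app/app.py", None)]
    v2 = v1[:2] + [("COPY app.py /app/", b"print('v2')"), v1[3]]
    print(reused_layers(layer_keys(v1), layer_keys(v2)))  # 2
```

This is why Dockerfiles usually place rarely changing steps, such as dependency installation, before the steps that copy frequently edited application code.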

Docker containers can be linked to each other to form a tiered application, and they can be started, stopped, and terminated. Containers interact with the Docker daemon through the CLI or Representational State Transfer (REST) APIs. This lightweight virtualization technique is used mainly because of features such as rapid application deployment, versioning of Docker images, minimal overhead, portability, and ease of maintenance, which help in building a Platform as a Service (PaaS).

Container Orchestration

Containerization with Docker makes it feasible to run containerized applications across many hosts in various clouds. A cluster architecture for containers enables multiple containers to operate across various hosts and clouds (Mahboob & Coffman, 2021). Different hosts holding the same Docker containers can be clustered and controlled together. Typical applications residing in clusters are logically created from the same base images, which makes them easy to replicate across multiple hosts. In Docker, cluster-based containerization bridges the gap between clusters and their management. The cluster orchestration platform monitors scaling and balances load among container services that reside on many hosts. It has to support scalable service discovery, container orchestration, and communication within clusters. Kubernetes is one of the available orchestration platforms for monitoring and managing IoT applications.

Kubernetes

Kubernetes is a multi-host container management platform that uses a master to manage Docker containers across many hosts. Clusters need an orchestration platform, and Kubernetes meets this need: it dynamically monitors applications running in containers and provides built-in features for resource provisioning and auto-scaling.
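
As one example of the built-in auto-scaling, the Kubernetes documentation describes the Horizontal Pod Autoscaler's core rule as desired replicas = ceil(current replicas × current metric / target metric), with no change when the ratio is within a tolerance (0.1 by default) of 1.0. The sketch below implements only that rule plus clamping to minimum and maximum replica counts. It ignores stabilization windows, pod readiness, and missing metrics, and the default `max_replicas=10` is an arbitrary assumption for the example.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA rule: scale by the ratio of observed to target metric."""
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target metric")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: do not scale
    else:
        desired = math.ceil(current_replicas * ratio)
    # Never go outside the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))
```

For instance, two pods averaging 200 units of CPU against a target of 100 would be scaled to four, while a small deviation such as 105 against 100 leaves the replica count unchanged.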

In an IoT scenario, this feature of Kubernetes can be exploited to monitor the nodes that host different IoT application containers. Pods are the basic deployment units; each pod holds a group of containers, and the master assigns each pod a virtual IP (Hoque et al., 2017). A node agent called the kubelet monitors the pods on its node and reports pod status, resource utilization, and events to the master. The Kubernetes master runs an API server, a storage component, a scheduler, and a controller manager (Mahboob & Coffman, 2021). Kubernetes also provides namespaces, which partition the cluster so that each application's resources are kept separate and applications are less likely to affect each other.
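
The bookkeeping described above (pods grouping containers, one IP per pod, kubelet status reports, and namespaced names) can be modelled as a toy. This is a hypothetical, heavily simplified stand-in for the master's state, not the Kubernetes API: the class `ToyControlPlane`, its methods, and the default pod address range `10.244.0.0/24` are assumptions made for illustration. Only the phase names "Pending" and "Running" are borrowed from real Kubernetes pod phases.

```python
import ipaddress
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Pod:
    namespace: str
    name: str
    containers: List[str]
    ip: str
    phase: str = "Pending"


class ToyControlPlane:
    """Hypothetical model of how a master might track pods and their IPs."""

    def __init__(self, pod_cidr: str = "10.244.0.0/24"):
        # iter() guards against hosts() returning a list for tiny networks.
        self._free_ips = iter(ipaddress.ip_network(pod_cidr).hosts())
        self.pods: Dict[Tuple[str, str], Pod] = {}

    def create_pod(self, namespace: str, name: str, containers: List[str]) -> Pod:
        key = (namespace, name)  # names only need to be unique within a namespace
        if key in self.pods:
            raise ValueError(f"pod {name!r} already exists in namespace {namespace!r}")
        try:
            ip = str(next(self._free_ips))  # one address per pod, shared by its containers
        except StopIteration:
            raise RuntimeError("pod address range exhausted") from None
        pod = Pod(namespace, name, list(containers), ip)
        self.pods[key] = pod
        return pod

    def report_status(self, namespace: str, name: str, phase: str) -> None:
        """What a kubelet-like node agent would call to report a pod's phase."""
        self.pods[(namespace, name)].phase = phase

    def list_pods(self, namespace: str) -> List[Pod]:
        return [pod for (ns, _), pod in self.pods.items() if ns == namespace]
```

In this model, two teams can each run a pod named "web" without clashing, because the lookup key includes the namespace.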

Reference List

Al-Dhuraibi, Y., Paraiso, F., Djarallah, N. and Merle, P., 2017. Elasticity in cloud computing: state of the art and research challenges. IEEE Transactions on Services Computing, 11(2), pp.430-447.

Dupont, C., Giaffreda, R. and Capra, L., 2017. Edge computing in IoT context: Horizontal and vertical Linux container migration. In 2017 Global Internet of Things Summit (GIoTS) (pp. 1-4). IEEE.

Englund, C., 2017. Evaluation of cloud-based infrastructures for scalable applications, pp.1-47.

Hoque, S., De Brito, M.S., Willner, A., Keil, O. and Magedanz, T., 2017. Towards container orchestration in fog computing infrastructures. In 2017 IEEE 41st Annual Computer Software and Applications Conference (COMPSAC) (Vol. 2, pp. 294-299). IEEE.

Madhuri, T. and Sowjanya, P., 2016. Microsoft Azure v/s Amazon AWS cloud services: a comparative study. International Journal of Innovative Research in Science, Engineering and Technology, 5(3), pp.3904-3907.

Mitchell, N.J. and Zunnurhain, K., 2019. Google Cloud Platform security. In Proceedings of the 4th ACM/IEEE Symposium on Edge Computing (pp. 319-322).

Muralidharan, S., Song, G. and Ko, H., 2019. Monitoring and managing IoT applications in smart cities using Kubernetes. Cloud Computing, 11.

Pahl, C. and Lee, B., 2015, August. Containers and clusters for edge cloud architectures: a technology review. In 2015 3rd International Conference on Future Internet of Things and Cloud (pp. 379-386). IEEE.

Pahl, C., 2015. Containerization and the PaaS cloud. IEEE Cloud Computing, 2(3), pp.24-31.

Radek, S., 2020. Continuous integration and application deployment with the Kubernetes technology, pp.1-51.

Saxena, D., 2020. Security analysis of Linux containers over cloud computing infrastructure.

Sayfan, G., 2017. Mastering Kubernetes. Packt Publishing Ltd., pp.1-385.

Sultan, S., Ahmad, I. and Dimitriou, T., 2019. Container security: Issues, challenges, and the road ahead. IEEE Access, 7, pp.52976-52996.

Thurgood, B. and Lennon, R.G., 2019, July. Cloud computing with Kubernetes cluster elastic scaling. In Proceedings of the 3rd International Conference on Future Networks and Distributed Systems (pp. 1-7).

Zhou, N., Georgiou, Y., Pospieszny, M., Zhong, L., Zhou, H., Niethammer, C., Pejak, B., Marko, O. and Hoppe, D., 2021. Container orchestration on HPC systems through Kubernetes. Journal of Cloud Computing, 10(1), pp.1-14.